Microsoft Fabric: Lakehouse vs Data Warehouse

Table of Content

1. Introduction of Lakehouse & Data Warehouse

2. Understand to make a choice between Lakehouse vs Data Warehouse

3. Use Cases: Lakehouse and Data Warehouse

4. Solution Architectures/Designs for Data Infrastructure

A. Medallion Architecture

B. Lakehouse sample architecture

C. Data Warehouse Sample Architecture

D. Lakehouse and Data Warehouse combined architecture

5. Conclusion

Introduction of Lakehouse and Data Warehouse from

Microsoft Fabric

In Microsoft Fabric, you can experience multiple components such as Data Engineering, Data Factory, Data Warehouse, Data Science, Real-Time Analytics, and Power BI.

This article aims to help you understand the differences between a Lakehouse and a Data Warehouse, exploring their solution designs and applicable use cases. By doing so, it will guide you in optimizing your selection to harness the potential of both fabric components.

Lakehouse

Microsoft Fabric Lakehouse represents the data architecture platform designed to streamline storage, management, and analysis of structured, semi-structured, and unstructured data, all within a unified environment and in open format. Lakehouse allows you to perform transformations through Spark computes or leverage SQL endpoints for analysis and exploration of your data. Notably, Fabric Lakehouse adopts delta parquet as its default file format, ensuring optimal performance for analytical workloads.

You can store data in two physical locations that are provisioned automatically, files (unmanaged) or tables (managed delta tables).

Tables:

In a Lakehouse environment, a managed area designed for hosting tables of all formats in Spark including CSV, Parquet, or Delta. All tables, whether automatically generated or explicitly created, are recognized as tables in the Lakehouse. Additionally, any Delta tables, which are essentially Parquet data files with a file-based transaction log, are seamlessly integrated and treated as tables as well.

Files:

In a Lakehouse environment, an unmanaged area designed for storing data in any file format. Any Delta files stored in this area aren’t automatically identified as tables. If you want to create a table over a Delta Lake folder in the unmanaged area, you’ll need to explicitly create a shortcut or an external table with a location that points to the unmanaged folder that contains the Delta Lake files in Spark.

Data Warehouse

Microsoft Fabric Data Warehouse is a lake centric data warehouse built on an enterprise grade distributed processing engine. One of the major advantages of Fabric Data Warehouse compared to other data warehousing solutions is that there is no need to copy the data from a Data Warehouse for other compute engines or teams to consume since the warehouse uses OneLake as its storage. One single copy of the data stored in Microsoft OneLake in a delta parquet format. Because of this, you can have cross-database querying to leverage different sources like OneLake or other sources through shortcuts of data seamlessly and with zero data duplication.

At its core, the Data Warehouse is a SQL MPP Engine (massively parallel processing engine) equipped with Delta Tables and TDS endpoints, which will provide you with full T-SQL DDL and DML support, empowers you with robust capabilities for data manipulation and analysis.

Understand to make a choice between Lakehouse vs Data Warehouse

While both the Lakehouse and Data Warehouse are open data format delta parquet, lake centric approaches and have some cross functionality, but they also exhibit distinct differences that can be categorized as follows.

- Endpoints for Lakehouse and Data Warehouse

- Types of data stored; data organized by

- Developer skillset; Read/Write operations

- Dynamic Data Masking and Data Governance

A. Endpoints for Lakehouse and Data Warehouse

There are three different endpoints between each the Lakehouse and Data Warehouse.

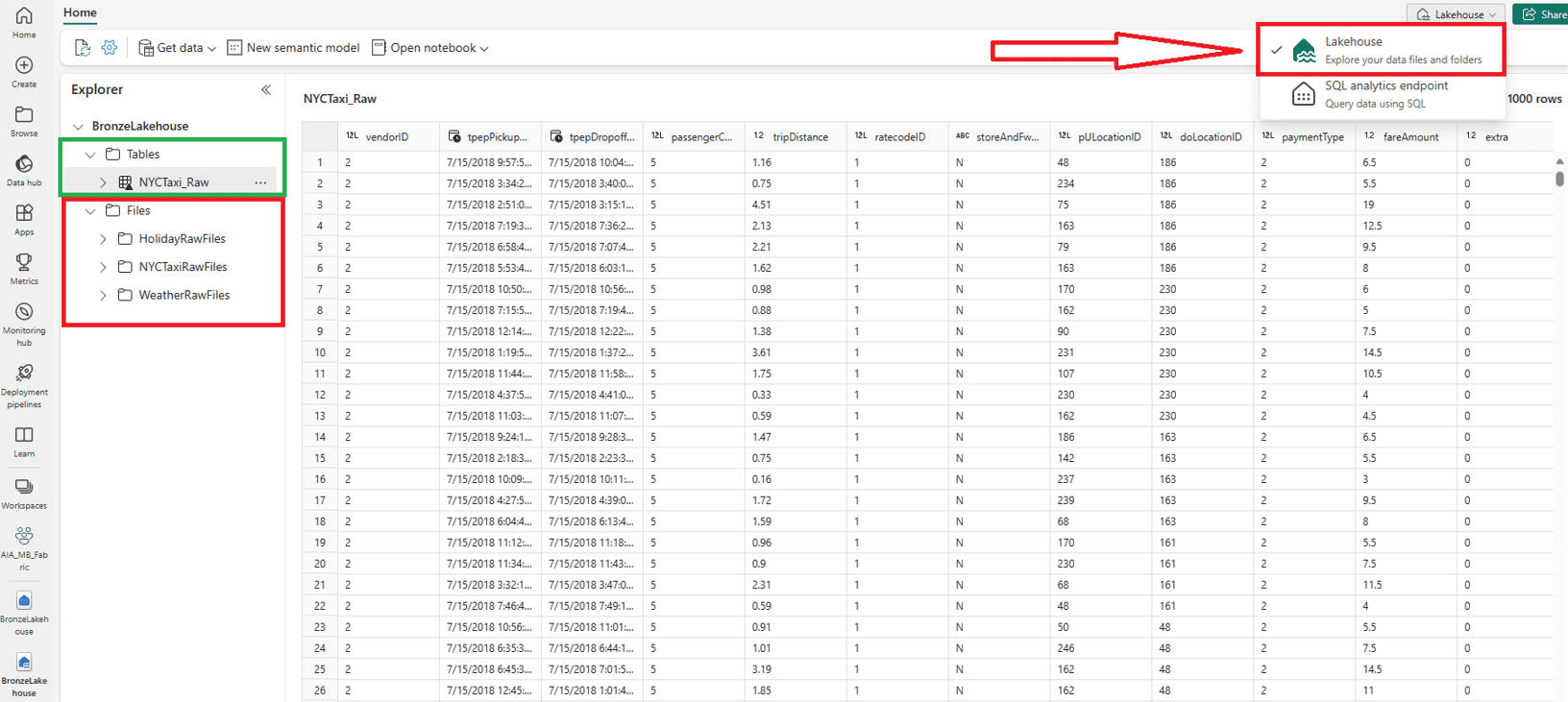

Lakehouse Endpoint for spark runtimes/libraries

To interact with files and tables within your Lakehouse for analysis, transformations, or processing using Spark, you’ll connect to the Lakehouse endpoint, different from the SQL Analytics Endpoint. You can connect using the URL, ABFS path, or by directly mounting the Lakehouse in your explorer. Spark provides flexibility, enabling write operations using Scala, PySpark, Spark SQL, or R. However, if you prefer using T-SQL, you’ll need to utilize the SQL Analytics Endpoint, where operations are limited to read-only.

You can easily check which endpoint you’re currently viewing via a drop-down selection option located in the top right corner, shown when expanded. Delta Tables and Files are available through the Lakehouse Endpoint.

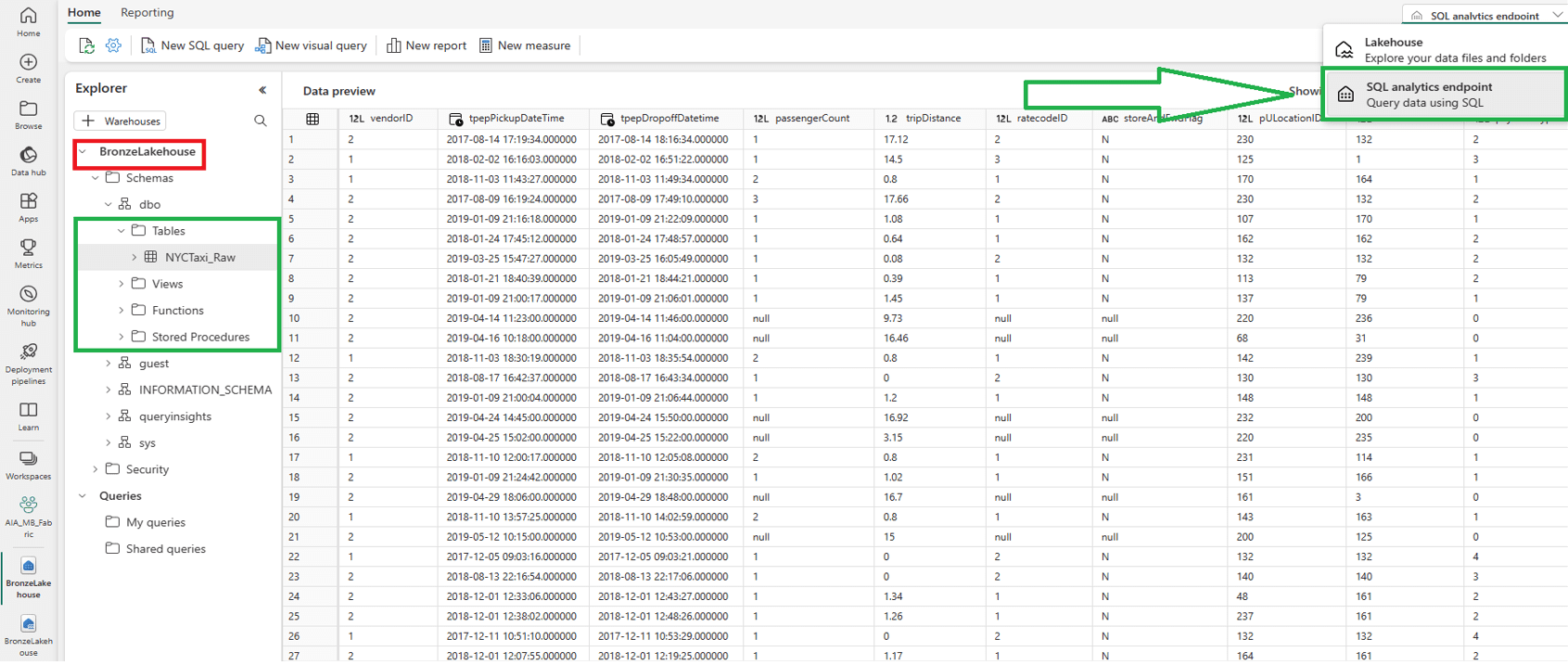

SQL Analytics Endpoint of the Lakehouse

The SQL Analytics Endpoint offers a SQL-based experience for accessing and querying delta tables within the Lakehouse architecture. It provides a read-only experience, exclusively focusing on delta tables. This would be the ‘Tables’ section of the Lakehouse, and the ‘Files’ section are not readable and discoverable through the SQL endpoint. This endpoint facilitates interactions such as querying, analysing data using T-SQL, creating views, saving functions, and implementing SQL object-level security. It enables data engineers to build a relational layer on top of physical data in the Lakehouse and expose it to analysis and reporting tools using the SQL connection string.

Notably, each Lakehouse within a workspace is automatically associated with a single SQL Analytics Endpoint, offering a consolidated point of access to delta table storage. Only delta tables are available through the SQL Analytics Endpoint.

Data Warehouse Endpoint

Data Warehouse Endpoint functions in a traditional SQL data warehouse manner. That means this endpoint supports the full T-SQL capabilities like your enterprise data warehouse.

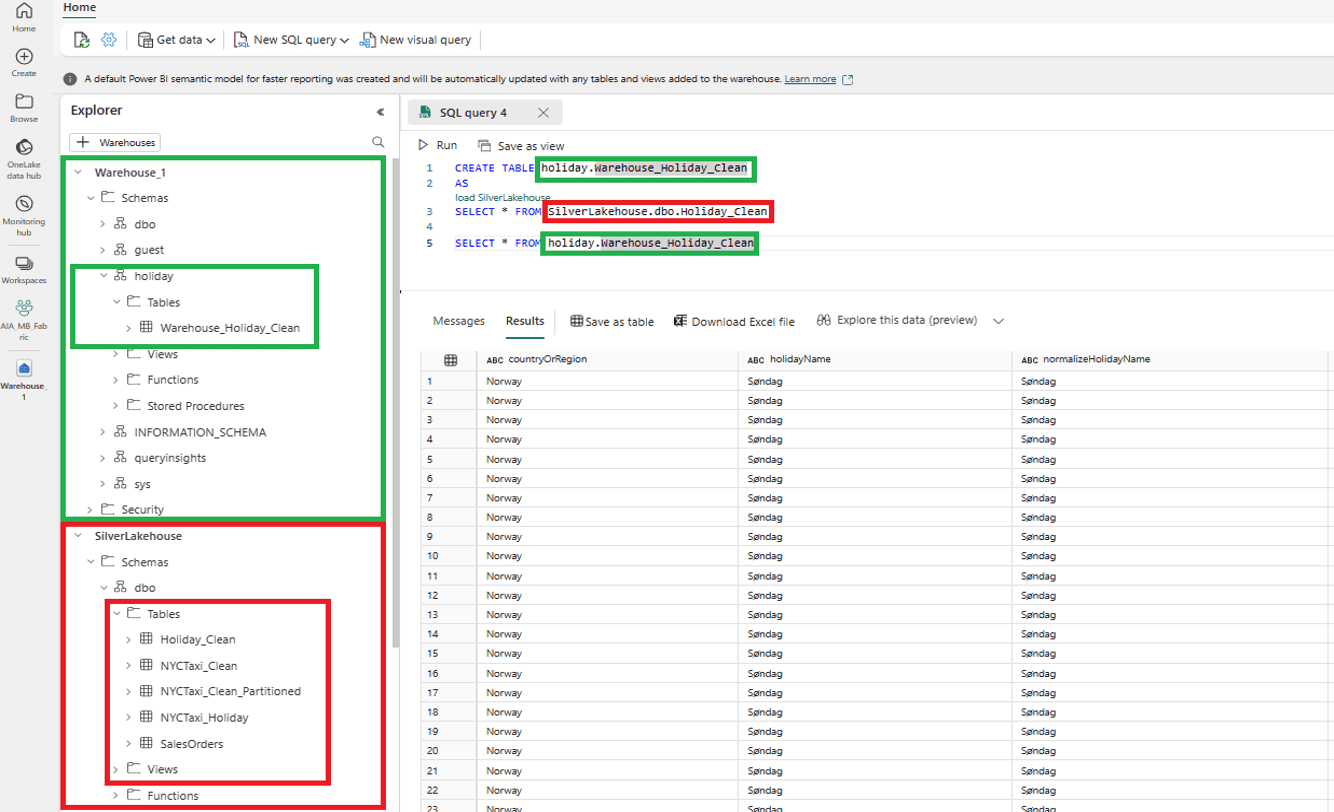

Unlike the SQL Analytics Endpoint, the Data Warehouse Endpoint offers you pairing the full read (using spark or T-SQL) and write (using only T-SQL) capabilities and the cross-database ingestion capabilities, you can ingest from multiple Data Warehouses or Lakehouses seamlessly. When ingesting data into the Data Warehouse, it will create a Delta Table that stored in OneLake.

The following example of a cross database query to load data into the Data Warehouse from the Lakehouse.

Warehouse table ‘holiday.Warehouse_Holiday_Clean’ is created and loaded with a CTAS statement with the Lakehouse delta table ‘SilverLakehouse.dbo.Holiday_Clean’ as the source.

B. Types of data stored; data organized by

Another contrast between the Lakehouse and Data Warehouse lies in the type of data that is stored and how it is organized.

Within Lakehouse Environment:

- You can store unstructured, semi-structured, or structured data.

- Data is organized using folders and files, lake databases, and delta tables.

Within Data Warehouse Environment:

- You can store only structured data.

- Data is organized into databases, schemas, and tables (with delta tables utilized behind the scenes).

C. Developer skillset; Read/Write operations

Within Lakehouse Environment:

- The primary skill is Spark (Scala, PySpark, Spark SQL, R) utilized for write operations and most of the workloads.

- There can be a secondary skill of T-SQL for read-only operations or analysis, facilitated by the SQL Analytics Endpoint on the Lakehouse.

- The interaction with the data will primarily be through Spark notebooks and Spark job definitions.

Within Data Warehouse Environment:

- The primary skill is SQL. This includes T-SQL and related SQL knowledge like data modeling, DDL/DML statements, SQL MPP engine understanding, SQL DBA knowledge etc.

- The interaction with the data will be through SQL scripts, Stored procedures, views, ad hoc queries, etc.

- You can read from the Data Warehouse with Spark, but it would not be used for consumption or serving.

D. Dynamic Data Masking and Data Governance

a. Dynamic Data Masking

Dynamic data masking limits sensitive data exposure by masking it to nonprivileged users. It prevents unauthorized viewing of sensitive data by enabling administrators to specify how much sensitive data to reveal, with minimal effect on the application layer.

Within Lakehouse Environment:

- Dynamic data masking is supported exclusively through the SQL analytics endpoint of the Lakehouse. Other file formats or engines like Spark won’t have access to dynamic data masking functionality. Object and row level security can also be applied, but only through the SQL Analytics Endpoint.

- Presently, Spark engines do not support object and row-level security. However, future integration with OneLake Security may change this.

Within Data Warehouse Environment:

- Dynamic data masking features are fully supported. This includes object-level (tables, views, functions, stored procedures, etc.), column-level, and row-level security, as well as DDL/DML operations.

- In addition to dynamic data masking, the data warehouse supports SQL granular permissions, column and row level security, and audit logs.

Here’s an example of dynamic data masking in a Fabric Data Warehouse:

Suppose we have a table called ‘Customer’ in Data Warehouse with the following columns:

- CustomerID

- FullName

- CreditCardNumber

We aim to mask the ‘CreditCardNumber’ column having sensitive credit card information by implementing a masking function (MASKED WITH) within T-SQL (DDL) scripts during table creation/alteration. This function replaces actual credit card numbers with masked values, ensuring unauthorized access prevention.

— Create or alter the table with masked CreditCardNumber column

ALTER TABLE ‘Customer’ (

— Other columns…

CreditCardNumber NVARCHAR (50) MASKED WITH (FUNCTION = ‘partial(0,”XXXX-XXXX-XXXX-“,4)’) NULL

);

For instance, table with masked CreditCardNumber column,

| CustomerID | FullName | CreditCardNumber | |

| 1 | John Doe | john.doe@example.com | XXXX-XXXX-XXXX-1234 |

| 2 | Jane Smith | jane.smith@example.com | XXXX-XXXX-XXXX-5678 |

Here, the actual credit card numbers have been replaced with masked values (e.g., “XXXX-XXXX-XXXX-1234”). Authorized users can still analyse the data without compromising sensitive information.

b. Data Governance

Data governance is a broader concept that encompasses the policies, processes, and controls implemented to manage, protect, monitor, and improve the discoverability of your organization’s sensitive information, to gain and maintain customer trust and to meet data governance and compliance requirements and regulations. Many of these capabilities are built in and included with your Microsoft Fabric license, while some others require additional licensing from Microsoft Purview.

Within Lakehouse Environment:

- Fabric Lakehouse encompasses policies, processes, and controls for managing, protecting, and improving the discoverability of sensitive information within an organization.

- It includes capabilities built into the Microsoft Fabric license, such as centralized management, security, data lineage, and compliance.

- The Microsoft Fabric admin portal serves as a centralized platform for administrators to manage the overall Fabric estate, including settings, capacities, domains, and workspaces.

- Sensitivity labels from Microsoft Purview Information Protection can be used to tag data manually or automatically within Fabric Lakehouse.

- Lineage visualization helps users understand the flow of data and its impact on downstream items within Fabric Lakehouse.

Within Data Warehouse Environment:

- Data governance within a Data Warehouse involves traditional T-SQL security constructs, object-level security, and securing data for collaboration and consumption.

- Traditional T-SQL security constructs, like logins, roles, and permissions, are used to secure data within the Data Warehouse.

- Object-Level Security involves setting permissions at the object level (e.g., tables, views, procedures) to control data access.

- Securing Data for Collaboration and Consumption is crucial for sharing data externally or using it for reporting and analysis within the Data Warehouse.

- Enterprise-grade governance capabilities, using tools like Microsoft Purview, provide advanced data governance functionalities such as data cataloging, data classification, and data lineage for Data Warehouses.

An overview of the key distinctions between Lakehouse and Data Warehouse:

| Lakehouse | Data warehouse | |

| Type of data | Unstructured, semi-structured, structured | Structured |

| Primary developer persona | Data engineer, data scientist | Data warehouse developer, SQL engineer |

| Primary developer skill set | Spark (Scala, PySpark, Spark SQL, R) | SQL |

| Data organized by | Folders and files, databases, and tables | Databases, schemas, and tables |

| Read operations | Spark, T-SQL | Spark, T-SQL |

| Write operations | Spark (Scala, PySpark, Spark SQL, R) | T-SQL |

| Multi-table transactions | No | Yes |

| Primary development interface | Spark notebooks, Spark job definitions | SQL scripts |

| Security | Row level, table level (when using T-SQL), none for Spark | Object level (table, view, function, stored procedure, etc.), column level, row level, DDL/DML, dynamic data masking |

| Access data via shortcuts | Yes | Yes (indirectly through the Lakehouse) |

| Can be a source for shortcuts | Yes (files and tables) | Yes (tables) |

| Query across items | Yes, query across Lakehouse and Data warehouse tables; query across Lakehouse (including shortcuts using Spark) | Yes, query across Lakehouse and Data warehouse tables |

Use Cases: Lakehouse and Data Warehouse

The choice between a Lakehouse, a Data Warehouse, or a combination of both depends on various factors that define the requirements of your solution. Here are some key use cases and scenarios to consider when making this decision.

- How the data is going to be consumed/used

- Requirements for your application or ETL/ELT

- Skillset of developers

How the data is going to be consumed/used

-

Users consuming through Power BI reports/dashboards

Both Lakehouse and Data Warehouse can serve Power BI with the same direct lake mode semantic model capability. This can be accessed either through import or DirectQuery mode via the SQL Analytics Endpoint of the Lakehouse or the Data Warehouse endpoint.

-

Business users analysing/exploring with ad-hoc T-SQL

Both Lakehouse and Data Warehouse provide SQL read functionality, but determining the best solution depends on various factors.

-

Allowing users to consume with Spark directly on files, including structured, semi-structured, unstructured data and all file types (not just delta tables or delta parquet). This can be for business users, data scientists etc.

Lakehouse is the correct use case for this scenario, since any file format can be stored, and Spark can be used to interact with these files. Data Warehouse allows Spark to read only to the tables, which might be enough but generally, you would not use a Data Warehouse for this scenario.

-

Mix of consumption: PySpark skills from the Data Engineering team who perform the transformation work/data modeling and business users/developers who consume read-only T-SQL queries whether ad hoc directly, cross-database queries to other Lakehouses/Warehouses in Fabric, or in Power BI

Lakehouse would be best for this scenario. If business users primarily consuming read only T-SQL, then they can use the SQL Analytics Endpoint on the Lakehouse for their consumption. Meanwhile, the data engineering team can continue their operations with Spark, ensuring efficient data processing.

There would be no need to use the Data Warehouse, unless there were other requirements/needs that would force the use of the Data Warehouse such as using the warehouse endpoint for third party reporting tools or analytic queries or requiring functionality only available in Data Warehouse.

-

AIML: Real-Time Personalized Recommendations, data can be structured, semi-structured, unstructured including clickstream data, customer behaviour data, transaction data etc.

In this scenario, a Lakehouse would be an ideal solution. The Lakehouse can store diverse types of data involved such as structured, semi-structured and unstructured data. It allows for low-cost storage and the flexibility to use multiple types of analytics from dashboards and visualizations to big data processing, real-time analytics, and machine learning to guide better decisions.

The AIML models can be trained on this diverse dataset to understand customer behaviour patterns and provide real-time recommendations. The models can be continuously updated with new data for more accurate recommendations.

A Data Warehouse would not be suitable in this case. Data warehouses are highly structured and optimized for T-SQL-based analytics and provide a high-performance analysis of structured data.

Requirements for your application or ETL/ELT

Various applications and ETL/ELT processes often demand specific functionalities either from a Lakehouse or a Data Warehouse. Let’s delve into some of the common requirements for these applications and workloads.

- ACID Transaction Compliance

For ACID compatibility, you will need to review the requirements of the solution since utilizing the ACID capability in either the Lakehouse or Data Warehouse; you will need to create a Delta Table. This means there is likely a need to either convert a file into a Delta Table or load directly into one for both components. So, the choice will depend on the other factors for the use cases such as skillset, other requirements, and consumption.

Being ACID compliant allows for the best data integrity and reliability possible by never allowing your data to be in an inconsistent state.

-

Multi-Table Transactions

Multi-table transactions are a way to group changes to multiple tables into a single transaction. This allows you to control the commit or rollback of read and write queries and modify data that is stored in tables using transactions to group changes together.

For multi-table transactions, you will need to use the Data Warehouse over the Lakehouse to perform these transactions. Multi-table transactions are ONLY supported in the Data Warehouse. Lakehouse currently does not support this functionality.

-

Dynamic Data Masking

Dynamic data masking is exclusively supported within the SQL analytics endpoint of the Lakehouse. Other file formats or engines, such as Spark, do not have access to dynamic data masking functionality. Object and row-level security can also be applied, but only through the SQL Analytics Endpoint.

In Data Warehouse, dynamic data masking features are fully supported. This encompasses object-level (tables, views, functions, stored procedures, etc.), column-level, and row-level security, as well as DDL/DML operations. Alongside dynamic data masking, the data warehouse also supports SQL granular permissions, column and row-level security, and audit logs.

Skillset of Developers

Within Lakehouse Environment:

Preferred candidates typically possess Spark expertise, particularly data engineers, data scientists, and professional developers who directly engage with files and/or Delta Tables for ETL/ELT workflows or similar tasks. This involves proficiency in Spark notebooks and Spark job definitions.

Developers/Users with only T-SQL knowledge primarily involves reading curated data accessible to them via Delta Tables through the SQL Analytics Endpoint. This demographic often includes citizen developers and professional developers focused on consumption or analysis tasks.

Within Data Warehouse Environment:

Developers/Users with T-SQL knowledge, usually data warehouse engineers, and SQL developers Preferred while building an ETL/ELT processes and Data Warehousing working with stored procedures, functions, and perform DBA tasks.

On the other hand, individuals with a limited T-SQL proficiency primarily focus on consuming or analysing curated data. These users include citizen developers and professional developers.

Solution Architectures/Designs for Data Infrastructure

Understanding the functionality, feature support, use cases, and differences between the Lakehouse, and Data Warehouse will assist you in building an architecture for different types of solutions.

The goal is to demonstrate architectures best practices using the Lakehouse exclusively, Data Warehouse exclusively, and Lakehouse and Data Warehouse together to provide a comprehensive understanding of different design patterns based on your criteria.

The solution architecture/design will be broken into these categories:

- Medallion architecture

- Lakehouse example architecture

- Data Warehouse example architecture

- Lakehouse and Data Warehouse combined architecture

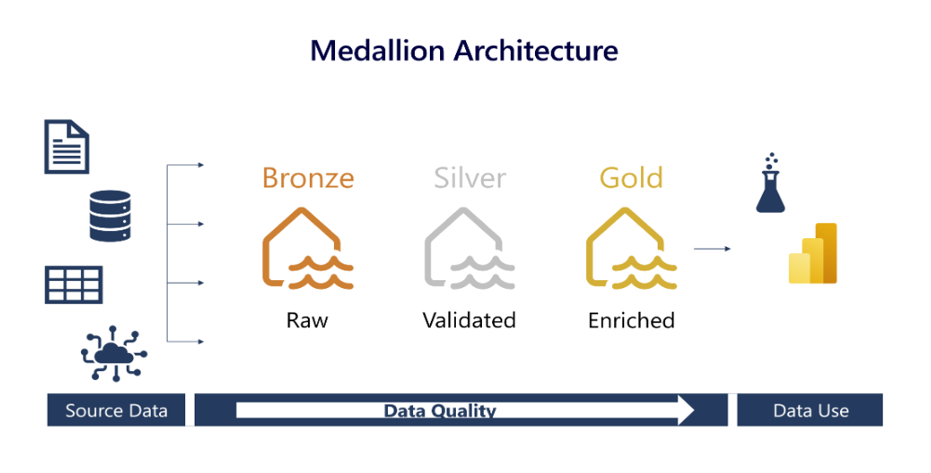

A. Medallion Architecture

The medallion architecture describes a structured series of data layers within the Lakehouse, each representing a distinct level of data quality. Comprising three tiers or zones—bronze (raw), silver (validated), and gold (enriched/curated). Each layer indicates the quality of data stored in the Lakehouse, with higher levels representing higher quality. This architecture ensures a clear understanding of data quality and usability throughout the storage process.

- Bronze: Raw zone. The initial layer that stores the source data in its original format. Typically, this zone experiences minimal user interaction as it primarily serves as the repository for source data.

- Silver: Validated zone. The silver layer where raw data undergoes cleansing and standardization processes. Data within this zone is structured with defined rows and columns, facilitating integration with other enterprise data sources. Transformations at the silver level should be focused on data quality and consistency rather than extensive data modeling.

- Gold: Enriched/Curated zone. The final layer sourced from the silver layer, represents the final stage of data refinement. Data within this zone is deemed “business ready” and aligns with analytical and business requirements, making it suitable for direct utilization in decision-making processes.

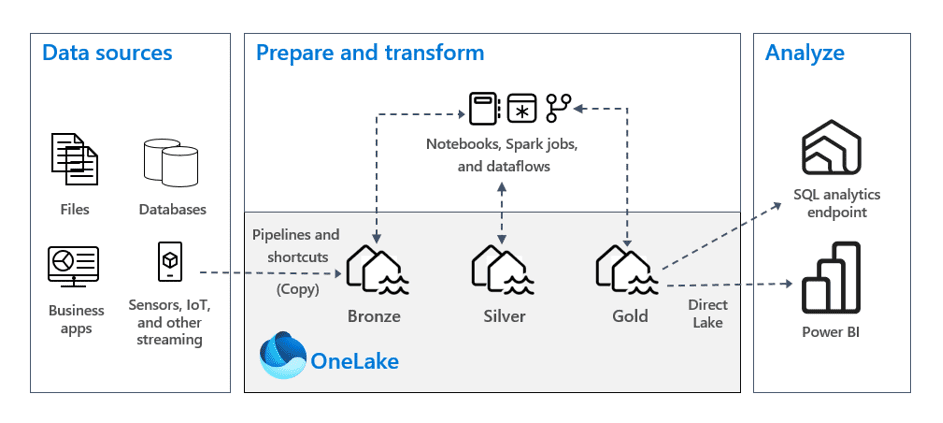

B. Lakehouse Example Architecture

Lakehouse architecture utilizing a medallion architecture framework designed to complement the developer team’s primary skill set, which comprises Spark expertise. There is no need for additional capabilities of the Data Warehouse like multi-table transactions and dynamic data masking. Consumption processes do not mandate the use of the Data Warehouse endpoint, specifically for functionalities required by certain third-party reporting tools. Additionally, there is no need for T-SQL DDL/DML capability, as it is not a requirement for the users or developers.

Data Source

Data sources encompass a wide range of sources from files to streaming data and everything in between. These sources may be located on-premises, within Azure, in other cloud providers’ environments, accessible via shortcuts, or contained within Fabric itself.

Preparation and Transformation

- Ingestion Methods: Utilize pipelines with 200+ connectors, notebooks, dataflows, or spark jobs includes streaming data and file/database connection. Support for on-premises, azure, other cloud providers, and shortcuts like OneLake, ADLS Gen2, Amazon S3, or Dataverse.

-

Transformation/ Data Promotion Methods: Choose from no code, low code, or code options. Spark preferred for complex transformation scenarios and high code option using notebooks or spark job definitions. Using Dataflows can be low code option to perform simple transformations and best for small semantic models or orchestration via Fabric Pipelines.

Lakehouse Zones

- Bronze Lakehouse: Keep data in original format; use Parquet or Delta Parquet where necessary. Utilize shortcuts if compatible.

- Silver Lakehouse: Prefer Delta Tables for enhanced capabilities. This area is to enrich your data through combining sources, transformation, cleansing, etc.

- Gold Lakehouse: Like Silver, utilize Delta Tables. Data is business-ready with star schema, normalized, and applied business logic.

Analysis

- SQL Analytics Endpoint: Privileged users can analyse data through the SQL queries over SQL Analytics endpoint with full SQL, read-only experience, create views and functions to customize and control an access and apply object and row-level security to protect sensitive data.

- Power BI: Users consume data via reports, semantic models, dashboards, etc. Direct lake mode is a new semantic model capability for parquet formatted files allows loading directly from Gold Lakehouse.

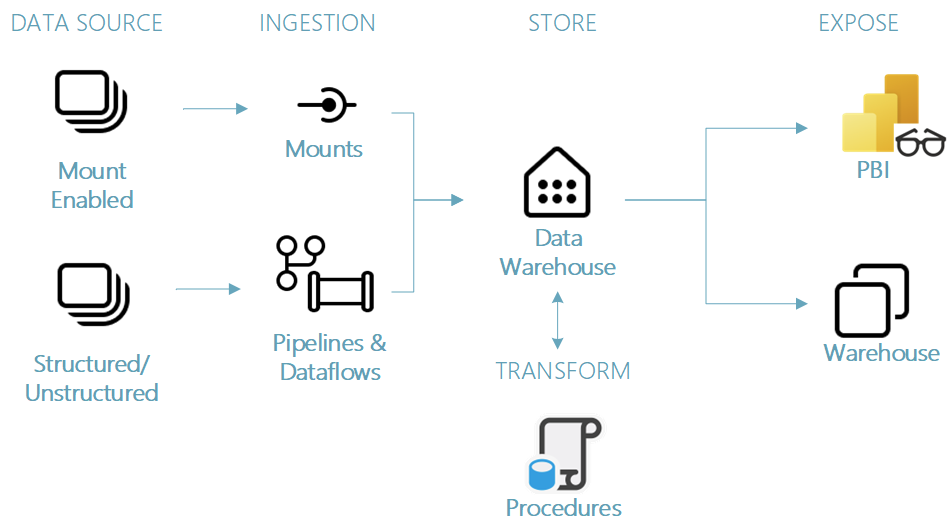

C. Data Warehouse Example Architecture

Data Warehouse architecture primarily works with T-SQL/Data Warehousing skills for data transformation. It’s suitable for handling multi-table transactions and supporting both transactional (OLTP) and analytical (OLAP) workloads. While transactional workloads can utilize a Data Warehouse, Lakehouse architecture may be preferred depending on application requirements. Consumption requires a Data Warehouse endpoint for functionality not available in the Lakehouse SQL endpoint that are required for third-party reporting tools or processes. Additionally, users and developers require T-SQL DDL/DML capabilities, including the ability to modify data post-normalization or transformation.

Data Source

‘Mount Enabled’ refers to shortcuts, allowing data usage without physically copying or moving it. Data sources include OneLake, Azure Data Lake Store Gen2 (ADLS Gen2), Amazon S3, or Dataverse. Structured/Unstructured data encompasses various sources like Azure Data Services, other cloud platforms, and on-premises.

Ingestion Methods

‘Mounts’ are referring to mounting an existing Azure Data Factory to leverage existing infrastructure to load data into Fabric and specifically the Data Warehouse.

Fabric Pipelines offers the ability to ingest data into your warehouse with 200+ native connectors using copy activities or dataflows to land the data with transformations if needed.

Store

- Warehouse Design: Bronze, silver, and gold layers are separate Data Warehouses within their workspace, facilitating security and governance. Alternatively, a single warehouse with schema/table enforcement for different zones, though it may require extensive management.

- Data Transformations: SQL stored procedures are recommended for ETL, orchestrated through Fabric pipelines like ADF.

Expose

- Warehouse: Users or SQL analysts/developers access data for reporting, exploration, or ad hoc analysis, with granular permissions, object-level security, and dynamic data masking. Views and functions customize user experience and access just like a traditional SQL environment.

- Power BI: Users will consume data through reports, semantic models, dashboards, etc., sourced from the gold warehouse. Direct lake mode allows loading parquet files directly from the data lake into Power BI without duplication.

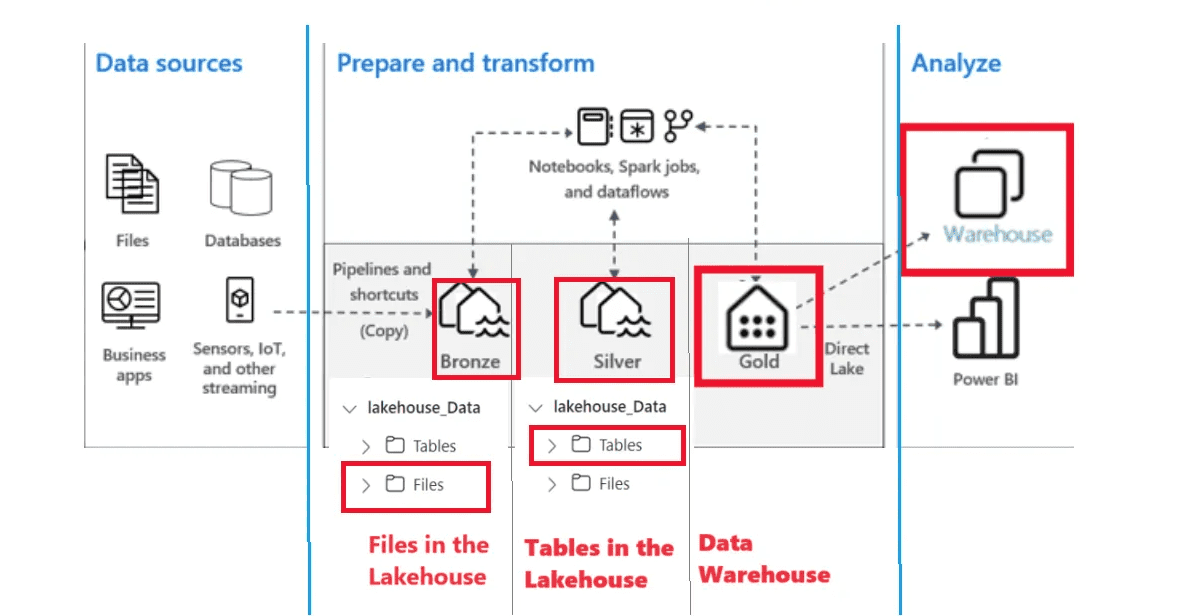

D. Lakehouse and Data Warehouse combined architecture

This integrated architecture combines the Lakehouse and Data Warehouse, offering the best of both. The only differences between this architecture and the Lakehouse architecture are that gold Lakehouse is replaced by a Gold Data Warehouse and consumption through the SQL Analytics Endpoint of the Lakehouse is replaced by the Data Warehouse endpoint. This architecture enables seamless access to the Data Warehouse for users via Power BI or warehouse endpoint. Developers utilize DDL/DML support at the gold layer without compromising performance or duplicating data. Spark handles all ETL/ELT transformations for optimal speed and flexibility, aligning with developer skill sets and ensuring top-notch user experience.

When deciding on combined architecture, consider developers skill set is Spark for data transformation using Spark notebooks. For users needing T-SQL support for DDL/DML, rely on the data warehouse. Users should retain the ability to modify data post-normalization or transformation. Additionally, ensure compatibility with third-party reporting tools or processes via Data Warehouse endpoints.

Conclusion

When working with Microsoft Fabric, it’s crucial to understand the capabilities of its components and your solution requirements. This understanding is key whether you’re designing new solutions or migrating existing ones.

This blog explores two essential components, a Lakehouse, and a Data Warehouse. It explains their various use cases, significant differences, and ideal solution architectures/designs. By fully grasping what they can do, you can skilfully adapt your architecture to meet user needs and handle workload demands. This knowledge allows you to create or modify solutions that are efficient, effective, and customized to meet the demands of your workload requirements.

Blog Author

Saiprasad Parit

Sr. Data Engineer

Intellify Solutions