Build Event-Driven Workflows with Azure and Fabric Events

Introduction

Things move fast in today's digital world, so detecting changes and acting on them quickly is not optional; it is a necessity.

Whether it is automating file handling, running data processes, or triggering alerts, the ability to respond quickly keeps businesses nimble and competitive.

We are excited to share that Azure and Fabric Events are now generally available. This new capability in Microsoft Fabric enables our teams to build workflows and applications that react to events faster, more predictably, and more intelligently.

What Are Azure and Fabric Events?

Azure and Fabric Events let you listen for and react to system changes in Microsoft Fabric and Azure services.

These events can be delivered to several consumers, such as:

- Activator, to trigger alerts, Power Automate flows, or Fabric items such as notebooks and pipelines.

- Eventstream, to transform events and route them to downstream destinations such as Lakehouse, Eventhouse, or custom endpoints.

With these capabilities, we can automate operations, reduce manual work, and gain better visibility into our data pipelines.

Key Features We’re Most Excited About

Fabric’s event-driven architecture is designed to simplify automation, enable real-time responsiveness, and reduce manual intervention across data workflows. Below are the major event types and capabilities that make this possible:

1. Real-Time Event Types Supported

- Fabric supports various system-level and data-level events that can be used to build responsive, automated solutions:

1.1 OneLake Events:

- These events are triggered when files or folders in OneLake (Fabric’s unified data lake) are created, deleted, or renamed.

Example use case: When a new file lands in a data lake folder, trigger a notebook to process it automatically.

1.2 Azure Blob Events:

- The same file-based events are also supported for Azure Blob Storage, enabling seamless integration with external data sources.

Example use case: Detect when an external partner drops data into your blob container and trigger data ingestion flows.
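To make the blob scenario concrete: Azure Storage publishes blob events with a type such as `Microsoft.Storage.BlobCreated` and a subject of the form `/blobServices/default/containers/<container>/blobs/<path>`. The sketch below assumes the event has already been delivered as a dictionary with `type` and `subject` keys and shows how a consumer might keep only new files in one partner container. The container name `partner-drop` is hypothetical.

```python
from typing import Optional, Tuple

BLOB_CREATED = "Microsoft.Storage.BlobCreated"
SUBJECT_PREFIX = "/blobServices/default/containers/"


def parse_blob_subject(subject: str) -> Optional[Tuple[str, str]]:
    """Split a blob event subject into (container, blob_path); None if it does not match."""
    if not subject.startswith(SUBJECT_PREFIX):
        return None
    container, sep, blob_path = subject[len(SUBJECT_PREFIX):].partition("/blobs/")
    if not sep or not container or not blob_path:
        return None
    return container, blob_path


def should_ingest(event: dict, watched_container: str = "partner-drop") -> bool:
    """True only for newly created blobs in the watched (hypothetical) container."""
    if event.get("type") != BLOB_CREATED:
        return False
    parsed = parse_blob_subject(event.get("subject", ""))
    return parsed is not None and parsed[0] == watched_container


if __name__ == "__main__":
    sample = {
        "type": BLOB_CREATED,
        "subject": "/blobServices/default/containers/partner-drop/blobs/2025/orders.csv",
    }
    print(parse_blob_subject(sample["subject"]), should_ingest(sample))
```

Splitting on `/blobs/` is safe here because container names may only contain lowercase letters, digits, and hyphens.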

1.3 Job Events:

- These events are raised when a Fabric job (such as a pipeline, notebook, or Spark job) is created, completes, or fails.

Example use case: Send a notification if a job fails or trigger a downstream process once a job completes successfully.
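The two reactions in this example (alert on failure, continue on success) amount to a simple dispatch on the event type. In Fabric this is configured in Activator rather than written by hand. The sketch below only illustrates the decision: the job event type names are our assumption and should be checked against the current Fabric event catalog, and the action names are hypothetical.

```python
from typing import Tuple

# Assumed event type names; confirm them against the Fabric job events reference.
JOB_SUCCEEDED = "Microsoft.Fabric.JobEvents.ItemJobSucceeded"
JOB_FAILED = "Microsoft.Fabric.JobEvents.ItemJobFailed"


def action_for_job_event(event: dict) -> Tuple[str, str]:
    """Return a (hypothetical action, message) pair for a job event."""
    job = event.get("subject", "<unknown job>")
    event_type = event.get("type")
    if event_type == JOB_FAILED:
        return "notify", f"Job failed: {job}"
    if event_type == JOB_SUCCEEDED:
        return "start_downstream", f"Job succeeded, starting next step after: {job}"
    # Created events and other status changes need no action in this example.
    return "ignore", ""
```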

1.4 Workspace Item Events:

- Monitor changes to Fabric workspace items, including datasets, notebooks, pipelines, and other assets.

Example use case: Track when a dataset is updated or when a pipeline is modified, enabling governance or audit workflows.
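For the governance scenario, a common pattern is an append-only audit trail with one JSON record per event. A minimal sketch, assuming events carry the standard CloudEvents attributes (`id`, `time`, `type`, `source`, `subject`); the example values in the usage section, including the event type string, are hypothetical.

```python
import io
import json
from typing import TextIO

# CloudEvents attributes worth keeping for an audit trail.
AUDIT_FIELDS = ("id", "time", "type", "source", "subject")


def append_audit_record(event: dict, sink: TextIO) -> dict:
    """Write the audit-relevant attributes of one event as a JSON line and return them."""
    record = {name: event.get(name) for name in AUDIT_FIELDS}
    sink.write(json.dumps(record, sort_keys=True) + "\n")
    return record


if __name__ == "__main__":
    # In practice the sink would be a file opened in append mode or a table writer.
    log = io.StringIO()
    append_audit_record(
        {"id": "evt-001", "time": "2025-01-01T00:00:00Z",
         "type": "example.item.updated", "source": "example-workspace",
         "subject": "pipelines/daily-load"},
        log,
    )
    print(log.getvalue(), end="")
```

Keeping only a fixed set of attributes makes the log schema stable even if event payloads grow over time.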

1.5 Eventstream:

- Acts as a central real-time event processing hub.

You can ingest, filter, transform, and route events to various destinations:

- Lakehouse (for storage or reporting)

- Eventhouse (for streaming analytics)

- Derived streams (for downstream processing)

- Custom APIs (for integration with external systems)

Example use case: Stream only “job failed” events to a custom monitoring system or alerting API.
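Eventstream routing is set up in the Fabric editor rather than in code, but the behavior is easy to reason about as a routing table: each destination has a condition, and an event is sent to every destination whose condition it meets. A sketch of the "only failed jobs to the monitoring API" example, with hypothetical destination names and an assumed event type string:

```python
from typing import Callable, Dict, List

Predicate = Callable[[dict], bool]

# Assumed type name for a failed job event; verify before use.
JOB_FAILED = "Microsoft.Fabric.JobEvents.ItemJobFailed"

# Hypothetical destinations, each paired with the condition an event must meet to reach it.
ROUTES: Dict[str, Predicate] = {
    "lakehouse_archive": lambda event: True,
    "monitoring_api": lambda event: event.get("type") == JOB_FAILED,
}


def route(event: dict, routes: Dict[str, Predicate] = ROUTES) -> List[str]:
    """Return every destination whose condition the event satisfies (fan-out, not first match)."""
    return [name for name, accepts in routes.items() if accepts(event)]
```

Because routing fans out, the archive keeps every event while the monitoring endpoint sees only failures.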

1.6 Activator:

- A powerful feature that lets you define actions that should be triggered when specific events occur.

It supports multiple action types and tools:

- Send real-time Teams or email notifications

- Trigger Power Automate flows for complex business logic

- Run pipelines or notebooks in Fabric automatically

Example use case: If a file arrives in a folder, automatically run a notebook, then send a Teams message upon success.
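This example is a short sequential chain in which the Teams message is sent only if the notebook run succeeds. Activator handles this through its rule configuration; the sketch below only models the control flow, and `run_notebook` and `send_teams_message` are hypothetical placeholders, not Fabric APIs.

```python
from typing import Callable, List, Tuple

Step = Tuple[str, Callable[[], bool]]


def run_chain(steps: List[Step]) -> List[str]:
    """Run steps in order, stopping after the first step that reports failure.

    Returns the names of the steps that were attempted.
    """
    attempted: List[str] = []
    for name, step in steps:
        attempted.append(name)
        if not step():
            break
    return attempted


def run_notebook() -> bool:
    # Hypothetical stand-in for the Fabric "run notebook" action.
    return True


def send_teams_message() -> bool:
    # Hypothetical stand-in for the Teams notification action.
    return True


if __name__ == "__main__":
    print(run_chain([("run_notebook", run_notebook),
                     ("send_teams_message", send_teams_message)]))
```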

Other Key Features of Microsoft Fabric Events

Microsoft Fabric Events offers powerful capabilities that help you build intelligent, responsive, and efficient event-driven applications. Below are some key features explained in detail:

Event Filtering

In a world where thousands of events can happen across systems every second, event filtering ensures that your application only listens to the ones that actually matter. This means your system stays lean, efficient, and focused. Here’s how it works:

Event Type

You can filter based on the type of event. For example, you may only want to handle:

- File uploads to a storage account

- Deletion of files or records

- Changes in a database or system configuration

This ensures your application doesn't get overwhelmed with unnecessary data.

Subject

Events often come with a "subject" that identifies the source or related resource. With subject-based filtering, you can:

- Listen only to events coming from a specific file path, like /invoices/2025/

- Monitor changes in specific services or modules in your architecture

This helps in targeting the exact part of your system you're interested in.

User-Defined Properties

You can add your own metadata or tags to events and filter based on that. For example:

- Process only events where priority = high

- Ignore events coming from a test environment (env = test)

This level of customization gives you full control over how your event system behaves.

Why it matters: Without filtering, your app might waste resources processing irrelevant events. With it, you’re only reacting to what’s important—improving performance, accuracy, and maintainability.
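Taken together, the three filter dimensions combine into one predicate: the event type must be in an allowed set, the subject must start with a given prefix, and user-defined properties must include some values and exclude others. Fabric applies these filters for you when you configure a source; this sketch only shows the logic. It assumes events are dictionaries with `type`, `subject`, and a hypothetical `properties` field for custom metadata, and `FileCreated` is a placeholder type name. The `priority` and `env` values come from the examples above.

```python
from dataclasses import dataclass, field
from typing import Dict, FrozenSet


@dataclass
class EventFilter:
    event_types: FrozenSet[str]                               # accepted event types
    subject_prefix: str = ""                                  # e.g. "/invoices/2025/"
    required: Dict[str, str] = field(default_factory=dict)    # property must equal value
    excluded: Dict[str, str] = field(default_factory=dict)    # property must not equal value

    def matches(self, event: dict) -> bool:
        if event.get("type") not in self.event_types:
            return False
        if not event.get("subject", "").startswith(self.subject_prefix):
            return False
        # Hypothetical location of user-defined properties on the event.
        props = event.get("properties", {})
        if any(props.get(key) != value for key, value in self.required.items()):
            return False
        return not any(props.get(key) == value for key, value in self.excluded.items())


invoice_filter = EventFilter(
    event_types=frozenset({"FileCreated"}),   # placeholder type name
    subject_prefix="/invoices/2025/",
    required={"priority": "high"},
    excluded={"env": "test"},
)
```

Checking the cheap type and subject conditions first means most irrelevant events are rejected before any property lookups.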

CI/CD Support

Microsoft Fabric Events is designed to fit seamlessly into modern development workflows using DevOps practices. Here’s what it supports:

- REST APIs: Use simple API calls to configure, manage, and monitor your event workflows programmatically.

- Git Integration: Keep your event configurations and workflows version-controlled alongside your codebase.

- Deployment Pipelines: You can easily include event setup in your CI/CD pipelines, automating testing and deployment of event-driven components just like you would for applications or services.
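As an illustration of the "test before deploy" idea, a pipeline step can validate event configuration files kept in Git before anything is deployed. The JSON layout below (`name`, `source`, `eventTypes`, `action`) is entirely hypothetical and is not Fabric's own Git serialization format; it only shows the kind of check a CI job can run.

```python
import json
import sys
from typing import List

# Hypothetical schema for a version-controlled list of event subscriptions.
REQUIRED_KEYS = {"name", "source", "eventTypes", "action"}


def validate_subscriptions(config_text: str) -> List[str]:
    """Return human-readable problems; an empty list means the config passes."""
    try:
        subscriptions = json.loads(config_text)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc}"]
    if not isinstance(subscriptions, list):
        return ["top level must be a list of subscriptions"]

    problems: List[str] = []
    seen_names = set()
    for index, sub in enumerate(subscriptions):
        if not isinstance(sub, dict):
            problems.append(f"entry {index}: must be an object")
            continue
        missing = REQUIRED_KEYS - set(sub)
        if missing:
            problems.append(f"entry {index}: missing {sorted(missing)}")
        if "eventTypes" in sub and not sub["eventTypes"]:
            problems.append(f"entry {index}: eventTypes must not be empty")
        name = sub.get("name")
        if name is not None:
            if name in seen_names:
                problems.append(f"entry {index}: duplicate name {name!r}")
            seen_names.add(name)
    return problems


def main() -> int:
    """CI entry point: read the config from stdin, print problems, return an exit code."""
    issues = validate_subscriptions(sys.stdin.read())
    for issue in issues:
        print(issue)
    return 1 if issues else 0
```

A pipeline step could call `sys.exit(main())` with the checked-in file piped to standard input, so a malformed configuration fails the build before deployment.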

Why it matters: Developers and DevOps teams can now treat event-driven architectures just like any other part of the application lifecycle—automated, repeatable, and easy to manage.

Global Availability

Fabric Events is now available in more geographic regions, which brings better performance, lower latency, and enhanced data compliance for organizations around the world. Currently supported regions include:

- Germany

- Japan

- France

- South Africa

- Central US

Why it matters: Running Fabric Events closer to your data and users means faster event processing, better user experience, and easier compliance with regional data laws.

Practical Applications in Our Organization

Here is how we can start using Azure and Fabric Events within our organization:

1. Automated File Ingestion

When a new data file arrives in OneLake or Azure Blob Storage, a Fabric Activator rule can start a pipeline that automatically ingests, processes, and stores the data, with no manual intervention required.

2. Job Chaining and Orchestration

Start a second job as soon as the first job completes successfully. This lets us build workflows that run end to end automatically while remaining observable.
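Activator can start the second job for you. For teams that prefer to drive the chain from their own code, the Fabric REST API exposes a "run on demand item job" operation, which we understand to be `POST /v1/workspaces/{workspaceId}/items/{itemId}/jobs/instances?jobType=Pipeline`; confirm the path and required permissions in the current Fabric REST reference. The sketch builds that request and sends it only when the first job's success event arrives. The workspace ID, item ID, token, and event type string are placeholders or assumptions.

```python
import urllib.request
from typing import Callable
from urllib.parse import quote, urlencode

FABRIC_API = "https://api.fabric.microsoft.com/v1"
# Assumed type name for a successful job event; verify before use.
JOB_SUCCEEDED = "Microsoft.Fabric.JobEvents.ItemJobSucceeded"


def build_run_pipeline_request(workspace_id: str, item_id: str, token: str) -> urllib.request.Request:
    """Build (but do not send) a request asking Fabric to run a pipeline item on demand."""
    url = (
        f"{FABRIC_API}/workspaces/{quote(workspace_id)}/items/{quote(item_id)}"
        f"/jobs/instances?{urlencode({'jobType': 'Pipeline'})}"
    )
    return urllib.request.Request(url, method="POST", headers={"Authorization": f"Bearer {token}"})


def start_next_if_succeeded(
    event: dict,
    request: urllib.request.Request,
    send: Callable[[urllib.request.Request], object] = urllib.request.urlopen,
) -> bool:
    """Send the prepared request only when the triggering event reports success."""
    if event.get("type") != JOB_SUCCEEDED:
        return False
    send(request)
    return True
```

Injecting `send` keeps the chaining decision testable without calling the live API.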

3. Application Alerts

Integrate with your own endpoints to notify business applications or dashboards when specific events occur.

How to Get Started

Our data engineering and analytics teams can start exploring this feature today with the following steps:

Step 1. Go to Real-Time in the left-hand navigation of Microsoft Fabric.

Step 2. Choose the Fabric Events page or the Azure Events page.

Step 3. Hover over any event group and click:

3.1 Set Alert (to use Activator)

3.2 Create Eventstream (to use Eventstream)

Step 4. Complete the wizard to configure your event source and destination.

How It Works: Real-Time File-Based Pipeline Triggering

One of our primary automation use cases uses Fabric Events to trigger a data-ingestion pipeline whenever file activity occurs. Here is how it works in practice:

Step-by-Step Implementation:

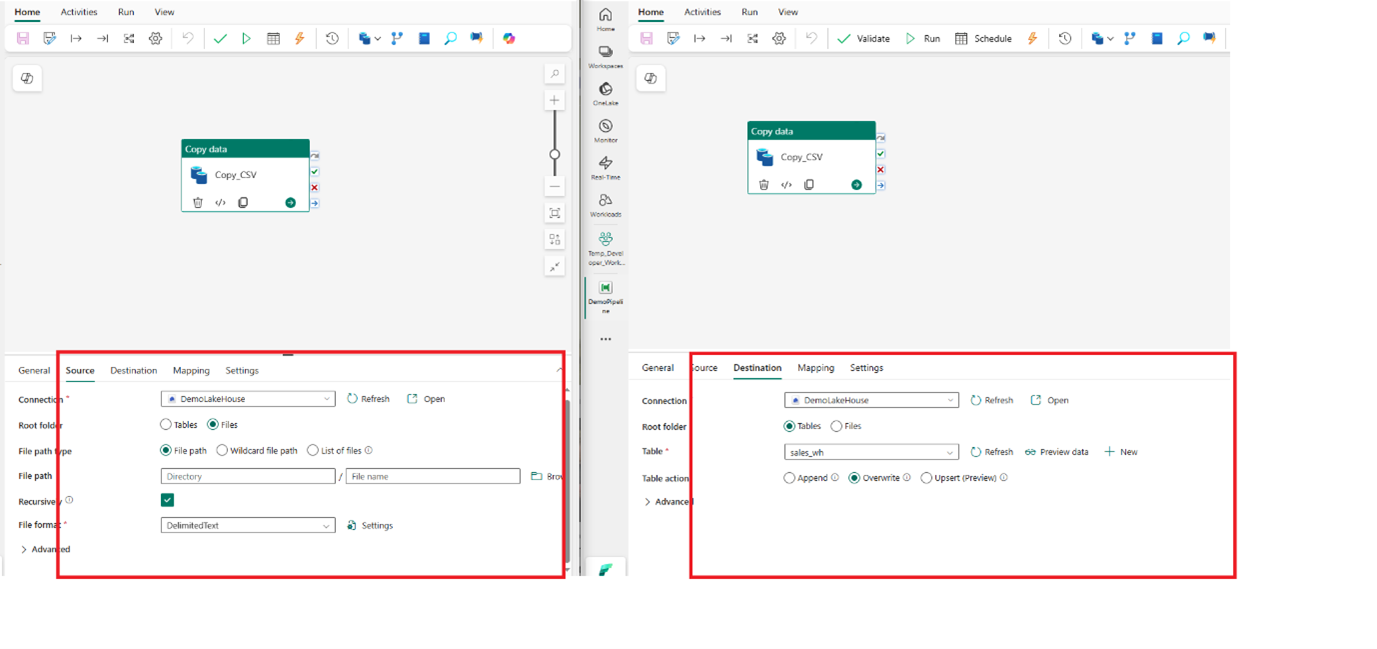

Step 1. Create Pipeline

Create a pipeline that loads a CSV file from a OneLake Files folder into the Lakehouse destination.

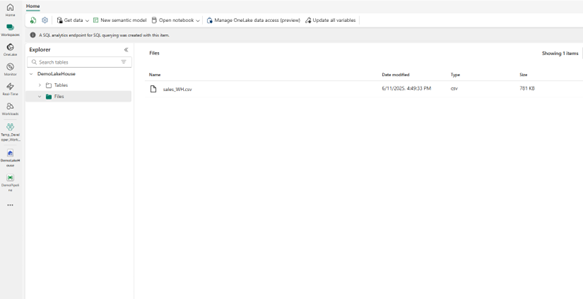

Upload a CSV File

A new CSV file is dropped into a OneLake directory (e.g., /Files/Sales_WH).

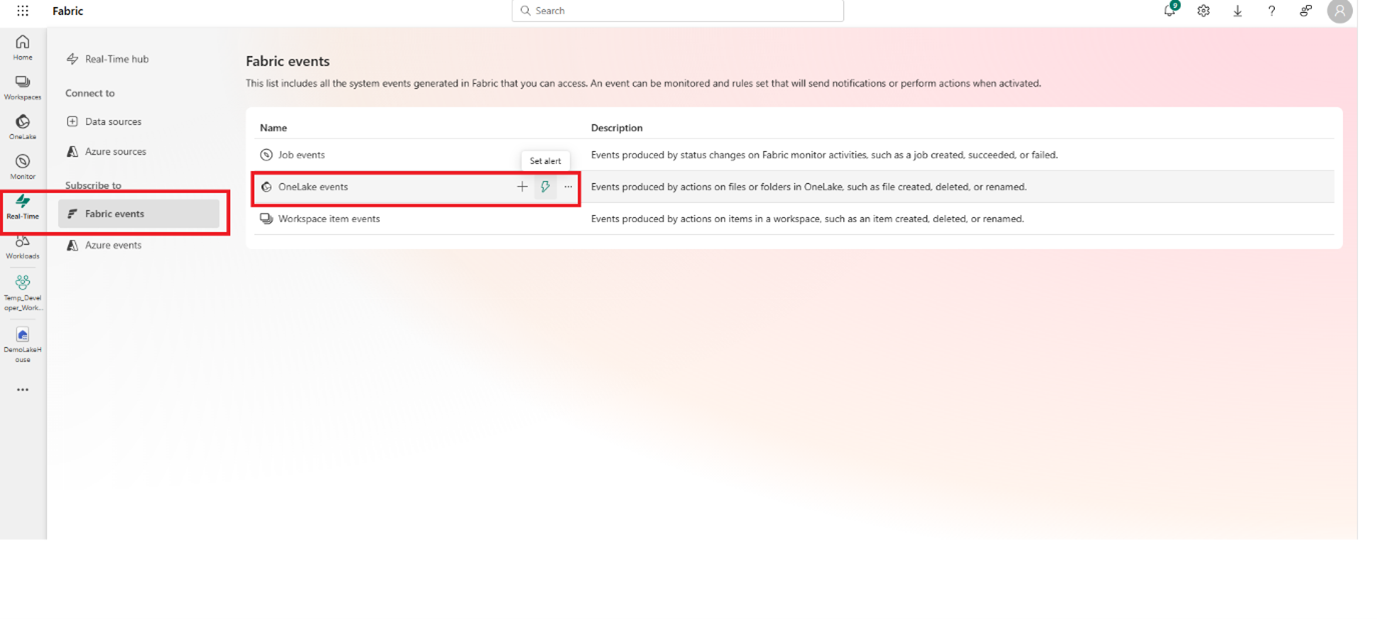

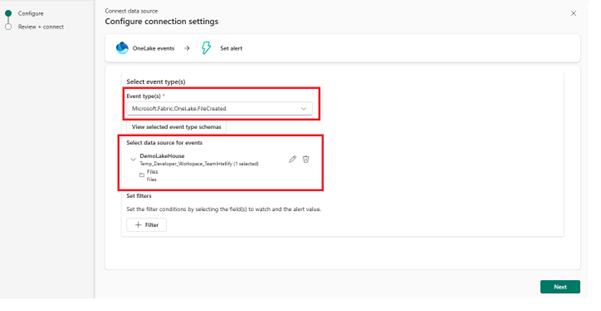

Step 2. Alert Creation and Fabric Event Detection

A OneLake event is raised when a file is created in the folder.

Go to the Real-Time hub, select Fabric events, and then click Set alert.

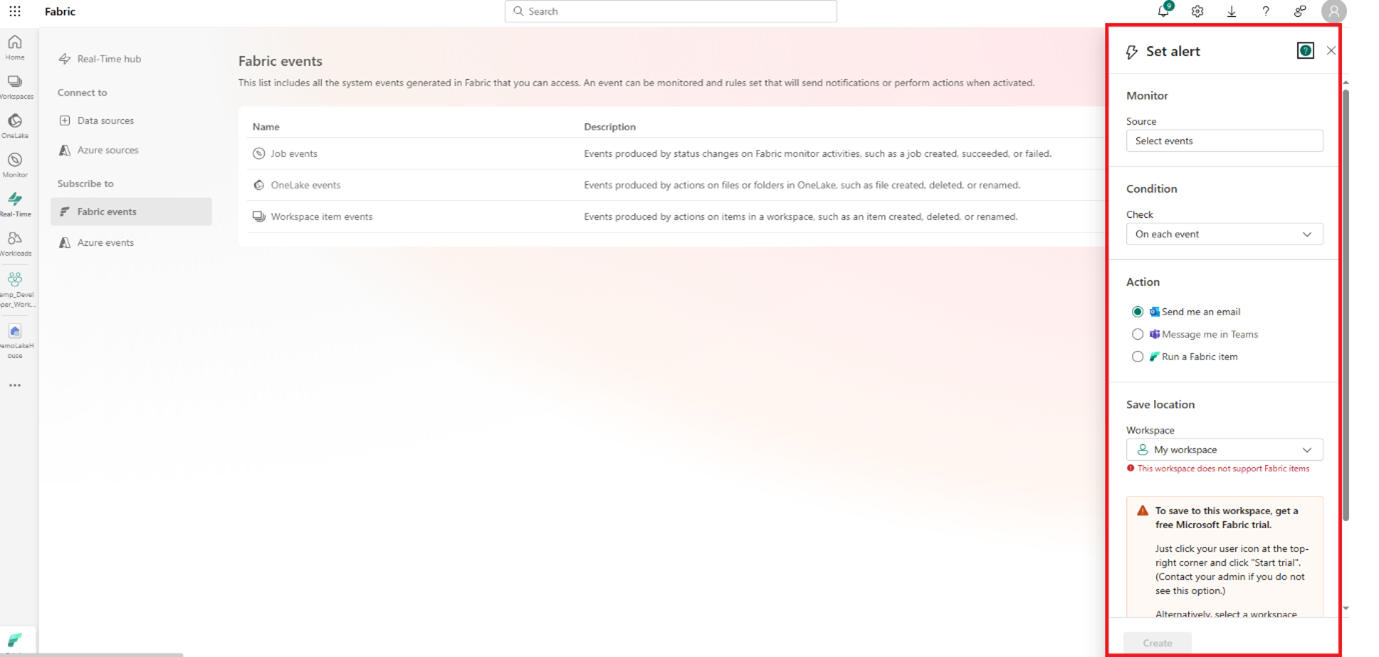

When you click Set alert, the following screen opens:

In the Source section, specify the file location where the data is generated or added.

Next, choose the event type that fits your requirement. Typically, you would use the 'File Created' event when the source location is a file folder in the Lakehouse.

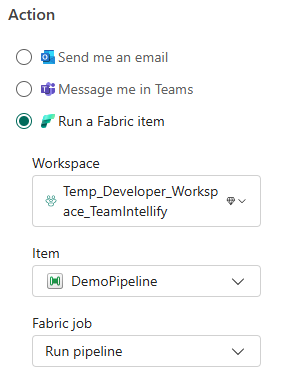
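Written as code, the alert condition configured in this step is a small predicate: the event must be a file-created event and the file must sit directly in the Sales_WH folder. For illustration we also restrict it to CSV files. This is only a sketch of the rule's logic. The `Microsoft.Fabric.OneLake.FileCreated` type name reflects our reading of the OneLake events documentation, and the assumption that the event `subject` ends with the file path should be checked against a real event payload.

```python
from pathlib import PurePosixPath

# Assumed type name for the "File Created" OneLake event; verify against a real payload.
FILE_CREATED = "Microsoft.Fabric.OneLake.FileCreated"
WATCHED_FOLDER = ("Files", "Sales_WH")


def is_new_sales_csv(event: dict) -> bool:
    """True when a .csv file was created directly inside .../Files/Sales_WH."""
    if event.get("type") != FILE_CREATED:
        return False
    # Assumption: the event subject ends with the file's path inside the lakehouse.
    path = PurePosixPath(event.get("subject", ""))
    return path.suffix.lower() == ".csv" and path.parent.parts[-2:] == WATCHED_FOLDER
```

Comparing the last two path components, rather than using a substring check, avoids matching look-alike folders such as `MyFiles/Sales_WH` or subfolders like `Sales_WH/archive`.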

After saving, go to Action, select Run Fabric item, and then choose the workspace and the item you want to run (for example, the pipeline).

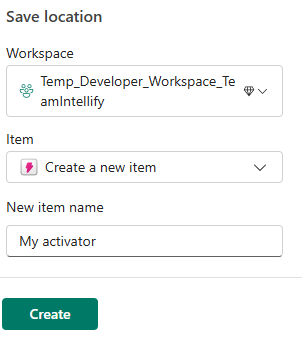

Step 3. Activator Setup

Step 4. Pipeline Trigger

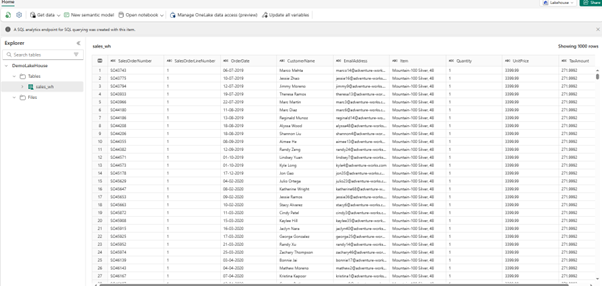

Go to the Lakehouse and add a file to the folder. When the file is added, Activator runs the Fabric pipeline, which loads the data from the file into the table.

Note: You must add an activity to your pipeline that removes or deletes the processed file; otherwise, the data from the file will be loaded into the Delta table repeatedly.
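The reasoning behind this note can be sketched with a local-filesystem stand-in: if processed files stay in the watched folder, a later run can pick them up again, so each file should leave the folder once its rows are loaded. The sketch below moves files to an archive folder rather than deleting them (a swapped-in variant of the delete step, useful if you want to keep the originals). Folder names are hypothetical, and the returned rows stand in for the write to the Delta table.

```python
import csv
import shutil
from pathlib import Path
from typing import Dict, List


def load_and_archive(incoming: Path, archive: Path) -> List[Dict[str, str]]:
    """Read every CSV in `incoming`, then move it to `archive` so it is not loaded again."""
    archive.mkdir(parents=True, exist_ok=True)
    rows: List[Dict[str, str]] = []
    for csv_path in sorted(incoming.glob("*.csv")):
        with csv_path.open(newline="", encoding="utf-8") as handle:
            rows.extend(csv.DictReader(handle))
        # Move the file only after it has been read successfully.
        shutil.move(str(csv_path), str(archive / csv_path.name))
    return rows
```

Running the function a second time on the same folder returns no rows, which is the behavior the delete step is meant to guarantee.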

Now check the table to confirm that the data has been loaded.

Confirmation and Logging

The success or failure of each pipeline run is logged for monitoring and audit purposes.

This whole process loads files into the Lakehouse automatically as soon as they arrive, with much less manual intervention.

Conclusion

Azure and Fabric Events are a key step forward in building smart data systems. They enable organizations to respond immediately to changes in OneLake, Azure Blob Storage, and other Fabric items.

In other words, teams can ingest data, process it, and track what is happening automatically instead of manually. Whether you are designing seamless data processes or building system notifications, the combination of Eventstream and Activator provides the power and flexibility that today's data work requires.

Now is the right time for teams to adopt event-driven architecture in Microsoft Fabric and achieve greater agility, efficiency, and insight across the organization.