[vc_row][vc_column width=”3/4″][vc_column_text]

According to recent surveys and analyses, businesses have a 50-70% probability of selling products and services to existing customers, compared with 5-20% for new customers. Recent studies also suggest that acquiring a new customer costs 5 to 8 times as much as retaining an existing one. This is one of the main reasons many businesses are keen to identify customer churn.

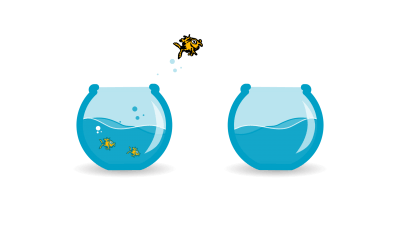

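Customer churn is measured by the number of customers who leave the organisation during a given period. It is often expressed as a churn rate, that is, as a percentage of the customers at the start of that period.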
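A minimal sketch of the churn-rate calculation follows. It assumes churn is expressed as customers lost during the period divided by customers at the start of the period. That is one common convention, and some teams divide by the average customer count instead. The function name `churn_rate` is hypothetical.

```python
def churn_rate(customers_at_start: int, customers_lost: int) -> float:
    """Return the churn rate for a period as a percentage.

    Assumes churn is measured against the customer count at the
    start of the period (one common convention).
    """
    if customers_at_start <= 0:
        raise ValueError("customers_at_start must be positive")
    if not 0 <= customers_lost <= customers_at_start:
        raise ValueError("customers_lost must be between 0 and customers_at_start")
    return 100.0 * customers_lost / customers_at_start


# Example: 1,000 customers at the start of the quarter, 50 of them left.
print(churn_rate(1000, 50))  # 5.0
```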

A lot of analysis is available on why customers typically leave and what actions organisations need to take. The more challenging part is identifying which customers are likely to leave, early enough to act on it proactively.

To predict which customers are likely to churn, the most important thing we need is a database of past customers, including those who churned. This data is used to develop a model that identifies current customers whose profile is similar to those who have already left.

The data is typically divided into two parts: a training set, used to build the model, and a testing set, used to evaluate the model's accuracy.
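A minimal, standard-library sketch of a random split is shown below. It assumes the records fit in memory and uses a 75/25 split, a ratio chosen only for illustration since no ratio is prescribed here. `split_train_test` and the sample records are hypothetical.

```python
import random


def split_train_test(rows, test_fraction=0.25, seed=42):
    """Shuffle the rows and split them into (training, testing) lists.

    A fixed seed makes the split reproducible between runs.
    """
    if not 0 < test_fraction < 1:
        raise ValueError("test_fraction must be between 0 and 1")
    shuffled = list(rows)                 # copy so the caller's data is untouched
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


# Hypothetical records: (customer_id, churned) pairs, 1 in 5 churned.
customers = [(i, i % 5 == 0) for i in range(100)]
train, test = split_train_test(customers)
print(len(train), len(test))  # 75 25
```

In practice, scikit-learn's `train_test_split` (in `sklearn.model_selection`) does the same job. Its `stratify` argument keeps the churned/non-churned ratio the same in both parts, which matters when churners are a small minority.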

Because we already know which customers in the testing set have left, we can compare the model's predictions with the true answers. A confusion matrix helps us measure the model's accuracy.
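The sketch below shows how a confusion matrix is tallied for a churn model, treating "churned" as the positive class. It also derives accuracy, precision, recall and the F1 score from the four counts. Because churners are usually a minority, accuracy alone can look good even for a weak model, so the other measures are worth reporting too. The labels and predictions are hypothetical.

```python
def confusion_matrix(actual, predicted):
    """Return (tp, fp, fn, tn) counts, treating True as "churned"."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    tp = fp = fn = tn = 0
    for a, p in zip(actual, predicted):
        if a and p:
            tp += 1        # churned, and predicted to churn
        elif p:
            fp += 1        # stayed, but predicted to churn
        elif a:
            fn += 1        # churned, but predicted to stay
        else:
            tn += 1        # stayed, and predicted to stay
    return tp, fp, fn, tn


def summarize(tp, fp, fn, tn):
    """Accuracy, precision, recall and F1 score from confusion-matrix counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical test-set labels (True = churned) and model predictions.
actual    = [True, False, False, True,  False, False, True, False]
predicted = [True, False, True,  False, False, False, True, False]
counts = confusion_matrix(actual, predicted)
print(counts)  # (2, 1, 1, 4)
print(summarize(*counts))
```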

Various machine learning algorithms and tools can help, including logistic regression, decision trees and random forests.

Some of the important terms and concepts were covered in our earlier blogs and are not repeated here. As data scientists, though, we should generally try several models and record a baseline of their performance, so that we can compare prediction accuracy as we move on to more complex, hybrid models and algorithms.
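One simple baseline is a model that always predicts the most common class in the training data. It is offered here as an example and is not necessarily the baseline used in our earlier blogs. Any real model should beat it. With imbalanced churn data, this baseline can already look quite accurate, which is a useful reminder not to rely on accuracy alone. The function name and labels are hypothetical.

```python
from collections import Counter


def majority_baseline_accuracy(train_labels, test_labels):
    """Accuracy of always predicting the most common class in the training set."""
    majority_class, _ = Counter(train_labels).most_common(1)[0]
    correct = sum(1 for label in test_labels if label == majority_class)
    return majority_class, correct / len(test_labels)


# Hypothetical labels: churn ("Yes") is the minority class.
train_labels = ["No"] * 80 + ["Yes"] * 20
test_labels = ["No"] * 17 + ["Yes"] * 3
print(majority_baseline_accuracy(train_labels, test_labels))  # ('No', 0.85)
```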

Logistic regression is a binary classification technique. It estimates the probability that a customer will leave and turns it into a simple Yes or No result. A decision tree, by contrast, can also predict numeric values (regression). The decision tree algorithm splits the data into two or more homogeneous sets based on the most significant differentiator among the input variables. Each split (for example, Yes or No on a condition) adds a branch to the tree. The result is a tree of decision nodes and leaf nodes, where the leaves hold the final decisions or classifications. The root node, at the top of the tree, holds the split on the best predictor, the variable that separates the classes most effectively.
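To make "the most significant differentiator" concrete, the sketch below scores every candidate threshold on a single numeric feature by weighted Gini impurity and keeps the best one. This is how CART-style trees choose a split. A full tree repeats this over every feature and then recursively on each child node. The feature (`tenure_years`) and the data are hypothetical.

```python
def gini(labels):
    """Gini impurity of a list of 0/1 churn labels (0.0 means a pure node)."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)      # share of churned customers
    return 1.0 - p**2 - (1.0 - p)**2


def best_split(values, labels):
    """Find the threshold on one numeric feature that minimizes weighted Gini.

    Rows with value <= threshold go to the left child, the rest to the right.
    Returns (threshold, weighted_impurity); threshold is None if no split helps.
    """
    n = len(labels)
    best = (None, gini(labels))        # starting point: impurity of the unsplit node
    # Skip the largest value: splitting there would leave the right child empty.
    for threshold in sorted(set(values))[:-1]:
        left = [y for x, y in zip(values, labels) if x <= threshold]
        right = [y for x, y in zip(values, labels) if x > threshold]
        weighted = len(left) / n * gini(left) + len(right) / n * gini(right)
        if weighted < best[1]:
            best = (threshold, weighted)
    return best


# Hypothetical data: years as a customer, and whether they churned (1) or stayed (0).
tenure_years = [1, 1, 2, 3, 5, 6, 8, 10]
churned      = [1, 1, 1, 0, 0, 0, 0, 0]
print(best_split(tenure_years, churned))  # (2, 0.0)
```

Here the split "tenure_years <= 2" separates churned from retained customers perfectly, so both child nodes are pure and the weighted impurity drops to 0.0.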

Random forest extends the decision tree by combining many decision trees to achieve higher prediction accuracy and model stability (random forest is an ensemble learning method). It can handle both regression and classification tasks. For classification, every tree classifies a data instance based on its attributes, and the forest chooses the class that receives the most votes.

For regression tasks, the forest takes the average of the individual trees' predictions.
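The sketch below shows only the aggregation step just described: a majority vote for classification and an average for regression. Building the trees themselves, which involves bootstrap samples and random feature subsets in a real random forest, is not shown. The tree outputs are hypothetical.

```python
from collections import Counter
from statistics import mean


def forest_classify(tree_votes):
    """Majority vote: the class predicted by the most trees wins.

    On a tie, Counter.most_common returns the class seen first.
    """
    return Counter(tree_votes).most_common(1)[0][0]


def forest_regress(tree_predictions):
    """Regression: average the individual trees' numeric predictions."""
    return mean(tree_predictions)


# Hypothetical outputs of five trees for one customer.
print(forest_classify(["Yes", "No", "Yes", "Yes", "No"]))  # Yes
print(forest_regress([120.0, 100.0, 110.0, 130.0, 90.0]))  # 110.0
```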

As explained in our earlier blogs, building such a model involves some common activities:

- Data Collection – Collect all relevant data, covering as many combinations of customer attributes as possible. In this case, that means all data containing historical customer churn information. We need to make sure the data contains a representative mix of churned and non-churned customers, with a fair distribution across the input (independent) variables.

- Data Processing – We need to make sure the data is complete, clean and accurate. In this step, missing values are filled using techniques such as mean or median imputation. If the data set marks only the records where the customer churned and leaves the rest blank, we can simply fill the blanks with “No” or 0.

- EDA (Exploratory Data Analysis) – Identify correlations between the available features and churn using:

  - NFD – Numerical feature distributions, such as discount offered or number of years as a customer.

  - CFD – Categorical feature distributions, such as marital status or occupation type (salaried or business).

- Identifying and encoding features – Encode the categorical features as numeric values. Common techniques include replacing values, label encoding, one-hot encoding, binary encoding and backward difference encoding.

- Training and Testing the Model

- Evaluating the Model – Measure and understand model performance using techniques such as the confusion matrix, F1 score, gain and lift charts, K-S chart, AUC-ROC, log loss, Gini coefficient, concordant-discordant ratio, root mean squared error (for regression) or cross-validation.
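The following is a standard-library sketch of the data processing, EDA and encoding steps above, applied to a few hypothetical records. It makes several assumptions: median imputation for the numeric column, a blank churn flag treated as "stayed" (0), missing categories grouped as "unknown", and one-hot encoding for the categorical column. The column names are made up for illustration.

```python
from statistics import median

# Hypothetical raw records: None marks a missing value, and the churn
# flag is only filled in for customers who left.
raw = [
    {"tenure_years": 2,    "occupation": "salaried", "churned": "Yes"},
    {"tenure_years": None, "occupation": "business", "churned": None},
    {"tenure_years": 7,    "occupation": "salaried", "churned": None},
    {"tenure_years": 4,    "occupation": None,       "churned": "Yes"},
]


def churn_rate_by_category(records, column):
    """EDA (CFD): share of churned customers for each value of a categorical column."""
    totals, churned = {}, {}
    for r in records:
        key = r[column] or "unknown"
        totals[key] = totals.get(key, 0) + 1
        churned[key] = churned.get(key, 0) + (r["churned"] == "Yes")
    return {k: churned[k] / totals[k] for k in sorted(totals)}


def preprocess(records):
    """Impute missing values and one-hot encode the categorical column."""
    # Data processing: fill missing numeric values with the column median.
    known = [r["tenure_years"] for r in records if r["tenure_years"] is not None]
    fill_tenure = median(known)
    # Encoding: one 0/1 column per observed category.
    categories = sorted({r["occupation"] or "unknown" for r in records})
    rows = []
    for r in records:
        occupation = r["occupation"] or "unknown"
        row = {
            "tenure_years": r["tenure_years"] if r["tenure_years"] is not None else fill_tenure,
            # A blank churn flag means the customer stayed, so encode it as 0.
            "churned": 1 if r["churned"] == "Yes" else 0,
        }
        for c in categories:
            row[f"occupation_{c}"] = 1 if occupation == c else 0
        rows.append(row)
    return rows


print(churn_rate_by_category(raw, "occupation"))
for row in preprocess(raw):
    print(row)
```

On real data sets, pandas (`fillna`, `get_dummies`) or scikit-learn (`SimpleImputer`, `OneHotEncoder`) are the usual tools for these steps.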

These models can be built with a range of tools, such as Microsoft Azure Machine Learning, Python and R. Contact us to learn more.[/vc_column_text][/vc_column][vc_column width=”1/4″][stm_sidebar sidebar=”527″][/vc_column][/vc_row][vc_row][vc_column][stm_post_bottom][stm_post_about_author][stm_post_comments][/vc_column][/vc_row]