AutoML in Microsoft Fabric – Accelerate Machine Learning with a Unified Data Platform

Table of Contents

1. Introduction

2. AutoML in Microsoft Fabric – Overview

3. Core capabilities and supported scenarios

4. How the workflow plays out in Fabric

5. Key advantages for data engineering & analytics teams

6. Important considerations, limitations and best practices

7. A practical use-case scenario

8. Integration with your data platform / medallion architecture

9. Conclusion

Introduction

In today’s analytics-driven environment, organisations are under increasing pressure to turn raw data into predictions, optimisation and business impact. Yet the machine-learning lifecycle remains complex: data preparation, feature engineering, model selection, hyperparameter tuning, deployment and monitoring all require substantial effort and skill. Automated Machine Learning (AutoML) aims to simplify and scale that process. In this blog post, we’ll explore what AutoML is, how Microsoft Fabric supports it, how to get the most out of it (especially for teams oriented around data engineering and analytics), and what to watch out for to maximise value.

AutoML in Microsoft Fabric – Overview

AutoML refers to the automation of the end-to-end machine learning process — from model selection and feature engineering to hyperparameter optimisation and validation.

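Instead of manually trying different algorithms and configurations, AutoML trains and evaluates many candidate models and hyperparameter settings automatically, then selects the one that scores best on a validation metric suited to the problem type (for example, AUC for classification or RMSE for regression).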
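To make the idea concrete, here is a deliberately tiny sketch of the selection loop at the heart of AutoML, in plain Python: fit each candidate on a training split, score it on a held-out validation split, and keep the best. The two candidate models (a mean baseline and a one-feature least-squares line), the RMSE metric and the function names are illustrative assumptions; FLAML searches far richer model families and hyperparameters, using a cost-aware search rather than this exhaustive loop.

```python
from math import sqrt
from statistics import mean


def fit_mean(xs, ys):
    """Baseline candidate: always predict the training mean."""
    m = mean(ys)
    return lambda x: m


def fit_linear(xs, ys):
    """One-feature ordinary least squares: y = intercept + slope * x."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx if sxx else 0.0
    intercept = my - slope * mx
    return lambda x: intercept + slope * x


def rmse(model, xs, ys):
    """Root-mean-square error of a fitted model on (xs, ys)."""
    return sqrt(mean((model(x) - y) ** 2 for x, y in zip(xs, ys)))


CANDIDATES = {"mean_baseline": fit_mean, "linear": fit_linear}


def select_best(train, valid, candidates=CANDIDATES):
    """Fit every candidate on `train`, score on `valid`, keep the lowest RMSE."""
    models = {name: fit(*train) for name, fit in candidates.items()}
    scores = {name: rmse(model, *valid) for name, model in models.items()}
    best = min(scores, key=scores.get)
    return best, models[best], scores
```

With training data `([1, 2, 3, 4, 5], [3, 5, 7, 9, 11])`, which follows y = 2x + 1, and validation data `([6, 7], [13, 15])`, the linear candidate wins with a validation RMSE of 0, while the mean baseline scores about 7.07.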

Why AutoML Matters

- Accelerates time to insight: Can shorten model-building cycles from days to hours.

- Broadens accessibility: Enables analysts and data engineers to create models without deep ML expertise.

- Ensures consistency and quality: Automates best practices like train/test splits and experiment tracking.

- Improves scalability: Handles multiple datasets and trials simultaneously for faster experimentation.

- Enhances collaboration: Standardises workflows across teams using shared datasets and governed environments.

AutoML in Microsoft Fabric

Microsoft Fabric integrates AutoML directly within its Data Science workload, combining it with other Fabric components like Lakehouse, Notebooks, MLflow, and Data Pipelines.

This end-to-end integration allows teams to prepare data, train models, and operationalise predictions — all within a single unified platform.

Key highlights:

- Powered by FLAML (Fast and Lightweight AutoML) — an open-source library optimised for efficiency.

- Offers both low-code (UI-based) and code-first (notebook) experiences.

- Supports classification, regression, and forecasting tasks.

- Embeds model tracking, versioning, and deployment via MLflow.

- Seamlessly connects to your Lakehouse for direct data access and storage.
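For the code-first experience, a minimal FLAML search wrapped in an MLflow run might look like the sketch below. It assumes `flaml` and `mlflow` are installed in the notebook environment (both are imported inside `train_automl`, so the settings helper can be used and tested on its own). The helper names, the experiment name and the logged key names are hypothetical; `AutoML`, `fit`, `best_estimator` and `best_loss` are FLAML's own API.

```python
SUPPORTED_TASKS = {"classification", "regression"}


def automl_settings(task, time_budget_s=60, metric="auto", log_file="automl.log"):
    """Validate inputs and build keyword arguments for flaml.AutoML.fit."""
    if task not in SUPPORTED_TASKS:
        raise ValueError(f"unsupported task for this sketch: {task!r}")
    if time_budget_s <= 0:
        raise ValueError("time_budget_s must be positive")
    return {
        "task": task,
        "metric": metric,              # "auto" lets FLAML choose a task-appropriate metric
        "time_budget": time_budget_s,  # search budget in seconds
        "log_file_name": log_file,     # FLAML records each trial in this file
    }


def train_automl(X_train, y_train, task="classification", time_budget_s=60,
                 experiment="automl-demo"):
    """Run a FLAML search inside an MLflow run (requires flaml and mlflow)."""
    import mlflow
    from flaml import AutoML

    mlflow.set_experiment(experiment)
    automl = AutoML()
    with mlflow.start_run(run_name=f"flaml-{task}"):
        automl.fit(X_train=X_train, y_train=y_train,
                   **automl_settings(task, time_budget_s))
        # Record the winner under our own (hypothetical) key names.
        mlflow.log_param("selected_estimator", automl.best_estimator)
        # FLAML reports a loss to minimise for the chosen metric.
        mlflow.log_metric("selected_val_loss", automl.best_loss)
    return automl
```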

Core Capabilities and Supported Scenarios

Here are key capabilities of AutoML in Fabric and the kind of scenarios it supports:

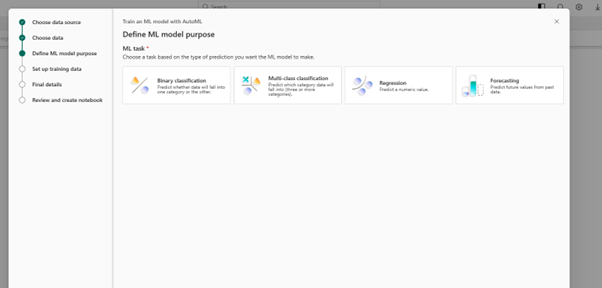

Supported ML task types

- Classification (binary / multi-class) – e.g., fraud detection, assigning customers to predefined segments.

- Regression – predicting numeric outputs (e.g., sales, price).

- Forecasting / time-series – predicting future values based on history.
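In FLAML terms, classification and regression map to `task="classification"` and `task="regression"`, while forecasting uses `task="ts_forecast"`, which expects the timestamp as the first column and the forecast horizon passed as `period`. Forecasting also changes how data should be split. The plain-Python sketch below shows a chronological holdout, where validation rows come strictly after training rows. The `FLAML_TASK` labels and the `chronological_split` helper are illustrative; Fabric's AutoML performs its own splitting.

```python
# Our own labels for the task types above, mapped to FLAML task strings.
FLAML_TASK = {
    "binary_classification": "classification",
    "multiclass_classification": "classification",
    "regression": "regression",
    "forecasting": "ts_forecast",
}


def chronological_split(rows, time_key, holdout):
    """Sort records by time and hold out the most recent `holdout` records.

    A random split would let the model train on periods that come after the
    validation data, which overstates forecasting accuracy.
    """
    if not 0 < holdout < len(rows):
        raise ValueError("holdout must be between 1 and len(rows) - 1")
    ordered = sorted(rows, key=lambda r: r[time_key])
    return ordered[:-holdout], ordered[-holdout:]
```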

Key capabilities

- Auto-featurization: The system can help with feature engineering (e.g., handling missing values, encoding, transformation) as part of the process.

- Multiple “modes” of AutoML: In the low-code UI you can select modes like Quick Prototype, Interpretable Mode, Best Fit, Custom. Each mode makes trade-offs between speed, interpretability and performance.

- Automatic splitting of training/test sets, appropriate for the task type.

- Experiment tracking and model registry: Via MLflow integration, you can compare runs, visualise model/hyperparameter performance and record final models.

- Seamless data ecosystem integration: You can load data from your Lakehouse into Spark/Pandas, run AutoML, register the model, and then score new data — all within Fabric. (This is a major plus.)
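One way to picture the mode trade-offs is as FLAML search settings: a time budget plus a list of candidate learners. The presets below are a hypothetical illustration, not Fabric's internal definition of its modes. `lgbm`, `lrl1` and `lrl2` (L1/L2-regularised logistic regression) and `estimator_list="auto"` are FLAML names; the budgets are arbitrary.

```python
import copy

# Hypothetical presets: NOT Fabric's internal definition of its modes.
MODE_PRESETS = {
    "quick_prototype": {"time_budget": 60, "estimator_list": ["lgbm"]},
    "interpretable": {"time_budget": 300, "estimator_list": ["lrl1", "lrl2"]},
    "best_fit": {"time_budget": 1800, "estimator_list": "auto"},
}


def settings_for_mode(mode, task="classification", **overrides):
    """Build flaml.AutoML.fit keyword arguments for a named mode.

    "custom" starts from an empty preset, so only the overrides apply.
    """
    if mode == "custom":
        base = {}
    elif mode in MODE_PRESETS:
        base = copy.deepcopy(MODE_PRESETS[mode])  # callers cannot mutate presets
    else:
        raise ValueError(f"unknown mode: {mode!r}")
    if mode == "interpretable" and task != "classification":
        # lrl1/lrl2 are logistic-regression learners, so classification only.
        raise ValueError("the interpretable preset here is classification-only")
    return {"task": task, **base, **overrides}
```

Keeping presets as data makes the speed, interpretability and accuracy trade-off explicit and easy to review alongside each experiment.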

How the workflow plays out in Microsoft Fabric

Here’s a high-level workflow for using AutoML in Microsoft Fabric. The steps below follow the low-code path; the code-first path covers the same stages in a notebook.

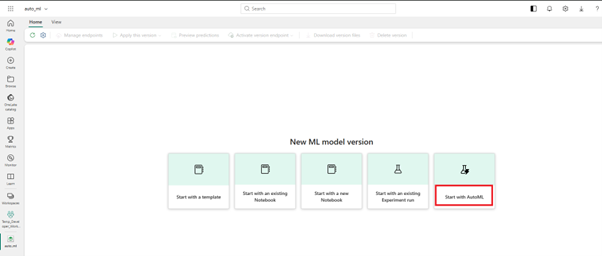

Low-code path

1. In the Fabric Data Science experience, open the low-code AutoML wizard.

2. Choose your data source (a table or file in the Lakehouse).

3. Specify the ML task type (classification, regression, forecasting).

4. Choose a mode: Quick Prototype, Interpretable, Best Fit or Custom.

5. The wizard generates a pre-configured notebook and launches the AutoML trial.

6. Monitor experiment runs and metrics, review feature importance and model performance.

7. Select the best model, register it, then integrate it into your pipeline for scoring and deployment.
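In a notebook attached to the Lakehouse, the code-first equivalent of these steps can be sketched as follows. It assumes the `spark` session that Fabric notebooks provide, `flaml` and `mlflow` installed, and a binary-classification label; `code_first_workflow`, `best_run` and all argument values are hypothetical. Logging the `AutoML` object through `mlflow.sklearn.log_model` relies on MLflow pickling it, so verify this against the FLAML and MLflow versions in your Fabric runtime.

```python
def best_run(runs, metric, maximize=True):
    """Return the run dict with the best value of `metric`, skipping runs without it."""
    scored = [r for r in runs if metric in r.get("metrics", {})]
    if not scored:
        raise ValueError(f"no run reports {metric!r}")
    pick = max if maximize else min
    return pick(scored, key=lambda r: r["metrics"][metric])


def code_first_workflow(spark, table, label, model_name, time_budget_s=120):
    """Lakehouse table -> FLAML search -> registered MLflow model."""
    import mlflow.sklearn
    from flaml import AutoML

    # toPandas() collects to the driver, so this suits modest table sizes.
    pdf = spark.read.table(table).toPandas()
    X, y = pdf.drop(columns=[label]), pdf[label]

    automl = AutoML()
    with mlflow.start_run(run_name=f"{model_name}-automl"):
        automl.fit(X_train=X, y_train=y, task="classification",
                   time_budget=time_budget_s)
        # Logging the AutoML object (not just automl.model.estimator) keeps
        # FLAML's input preprocessing together with the fitted model.
        mlflow.sklearn.log_model(automl, "model",
                                 registered_model_name=model_name)
    return automl
```

`best_run` mirrors step 7 on plain dictionaries; in a notebook, `mlflow.search_runs` returns the same run information as a pandas DataFrame.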

Key Advantages for Data Engineering & Analytics Teams

For teams working with Spark, Delta Lake and medallion architectures (bronze/silver/gold), the advantages of AutoML in Fabric are compelling:

- Platform consolidation: You already work within Fabric; AutoML inside the same platform avoids tool fragmentation.

- Lakehouse-native: Your data (bronze/silver/gold) lives in the Lakehouse; AutoML can pull directly from it, minimising data movement and friction.

- Faster time-to-value: With repetitive steps (algorithm selection, hyperparameter tuning) automated, your engineering team can focus more on feature engineering, business logic and deployment.

- Broader participation: Analysts and data engineers (not just expert data scientists) can contribute to model building via low-code UI, thus increasing capability across the organisation.

- Best practices baked in: The system handles splits, logging, tracking and versioning, which supports model quality, governance and reproducibility.

- Scalability: Because AutoML runs in Fabric’s Spark environment on Fabric compute, you can work with larger datasets and reuse your existing architecture for modelling.

- Operationalisation ease: After the model is built, you can embed scoring in your pipelines (e.g., after your gold layer load) and feed results to dashboards, alerts, or other actions.

Important Considerations, Limitations and Best Practices

While AutoML in Fabric is strong, it’s not a magic wand — and using it smartly will determine how much value you get.

Considerations & limitations

- At the time of writing, AutoML in Fabric is in preview, so features may change and support may be limited.

- The quality of the model still fundamentally depends on data quality, relevance, labelling and features — bad input data will yield poor outputs.

- Feature engineering remains important: although AutoML helps, domain-knowledge based features often make a big difference.

- There’s a trade-off: “Best Fit” mode may deliver the highest accuracy but can also produce models that are harder to explain or deploy. Conversely, “Interpretable” mode limits model complexity, which may cost some accuracy.

- Operationalisation is still required: you still need to version models, monitor drift, schedule retraining, integrate into pipelines, handle data changes. AutoML doesn’t remove that.

- For very complex or custom modelling workflows (deep learning, computer vision, streaming predictions), you may still need fully custom frameworks or platforms such as Azure Machine Learning. Community commentary notes that Fabric’s Data Science experience is newer and oriented more towards integrated, lighter-weight workflows.
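As an example of domain-driven features, customer and donor problems often benefit from recency, frequency and monetary (RFM) aggregates computed up to a cut-off date. A stdlib sketch, assuming a hypothetical list of `(donor_id, date, amount)` events; in practice you would compute the same aggregates in PySpark when building the silver or gold layer.

```python
from datetime import date


def rfm_features(donations, as_of: date) -> dict:
    """Recency, frequency and monetary features per donor.

    `donations` holds (donor_id, date, amount) tuples; events after `as_of`
    are ignored so that features never use information from the future.
    """
    acc = {}
    for donor, day, amount in donations:
        if day > as_of:
            continue
        f = acc.setdefault(donor, {"last": day, "frequency": 0, "monetary": 0.0})
        f["last"] = max(f["last"], day)
        f["frequency"] += 1
        f["monetary"] += amount
    return {
        donor: {
            "recency_days": (as_of - f["last"]).days,
            "frequency": f["frequency"],
            "monetary": f["monetary"],
        }
        for donor, f in acc.items()
    }
```

The cut-off matters: features built from events after the prediction date leak the answer into training and make validation scores look better than production will.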

Best practices

- Ensure your data foundation is solid: Use your medallion architecture (bronze → silver → gold) to produce a curated modelling dataset with clean features and target labels.

- Define your business objective clearly: Know what you’re predicting, what metric matters (AUC, RMSE, recall, business value), what timeframe.

- Select an appropriate mode: For exploration you might pick Quick Prototype; for production you might pick Best Fit or Interpretable depending on stakeholder needs.

- Monitor experiments and feature importance: Don’t accept the best model blindly. Check feature importance, look for suspicious proxy features and check for fairness issues. The flaml.visualization module can help here.

- Embed model into your pipeline: After model training, integrate scoring as part of your data pipeline (e.g., after gold layer refresh).

- Version models and data: Use the model registry, snapshot datasets, and track hyperparameters and metadata via MLflow.

- Plan for retraining and drift: Schedule model refresh when significant new data arrives or performance degrades.

- Governance and explainability: Ensure business users understand the model, its assumptions, limitations, and error margins.
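For drift, one widely used measure is the Population Stability Index (PSI), which compares the binned distribution of a feature or score at training time with its distribution in new data: PSI = Σ (actualᵢ − expectedᵢ) · ln(actualᵢ / expectedᵢ). A plain-Python sketch follows. The bin edges should come from the training baseline, and the 0.25 PSI limit and 0.05 metric-drop rule in `needs_retraining` are common rules of thumb used here as hypothetical defaults, not Fabric settings.

```python
from math import log


def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index between a baseline sample and a new sample.

    `edges` are ascending bin boundaries; values below the first edge fall in
    the first bin and values at or above the last edge fall in the last bin.
    `eps` replaces empty-bin shares so the logarithm stays finite.
    """
    def shares(values):
        if not values:
            raise ValueError("samples must be non-empty")
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[sum(v >= e for e in edges)] += 1  # index = edges at or below v
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * log(ai / ei) for ei, ai in zip(e, a))


def needs_retraining(psi_value, current_metric, baseline_metric,
                     psi_limit=0.25, max_drop=0.05):
    """Hypothetical policy: retrain on strong drift or a material metric drop."""
    return psi_value > psi_limit or (baseline_metric - current_metric) > max_drop
```

Logging the PSI value with `mlflow.log_metric` on each scoring run gives a drift history next to the model's experiment.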

A Practical Use-Case Scenario

Here’s how you might use AutoML in Fabric in your environment:

Suppose you have built your gold layer from POS/CRM data (220k+ individuals, ~31 features) and want to predict each individual’s “likelihood to donate in the next quarter”. The steps might look like this:

1. Load the gold dataset into a Fabric notebook (as a Spark DataFrame, or converted to pandas-on-Spark).

2. Choose binary classification as the task.

3. Use the low-code wizard for rapid prototyping (or the code-first path for more control). Specify the target column and choose a mode (e.g., Interpretable, for stakeholder transparency).

4. Launch the AutoML trial. The system explores algorithms and hyperparameters and logs each experiment run.

5. Review the results: see which model performed best, check feature importances and choose the final model.

6. Register the model in the model registry, then embed the scoring logic in your pipeline so that each new batch of donor data is scored, with results written back to your Lakehouse and surfaced in dashboards.

7. Monitor accuracy and performance over time (e.g., quarterly) and schedule retraining when needed.

This workflow takes you from the data engineer’s gold layer → ML model → operational scoring → business outcome (donor prediction) within one ecosystem.
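The scoring and monitoring steps in this scenario could be sketched as a notebook function that applies a registered model to a Lakehouse table with `mlflow.pyfunc.spark_udf` and appends the results to a Delta table, plus a small helper for the quarterly accuracy check. It assumes a 0/1 label, a model URI of the form `models:/<name>/<version>`, and hypothetical table and column names; Fabric’s own PREDICT feature is an alternative route for batch scoring.

```python
def precision_recall(actual, predicted):
    """Precision and recall for binary 0/1 labels (0.0 when undefined)."""
    pairs = list(zip(actual, predicted))
    tp = sum(1 for a, p in pairs if a == 1 and p == 1)
    fp = sum(1 for a, p in pairs if a == 0 and p == 1)
    fn = sum(1 for a, p in pairs if a == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def score_batch(spark, source_table, target_table, model_uri, feature_cols):
    """Score a Lakehouse table with a registered MLflow model and append results."""
    import mlflow.pyfunc
    from pyspark.sql import functions as F

    predict = mlflow.pyfunc.spark_udf(spark, model_uri=model_uri)
    scored = (
        spark.read.table(source_table)
        # struct(...) passes named columns, so the model sees its feature names.
        .withColumn("prediction", predict(F.struct(*feature_cols)))
        .withColumn("scored_at", F.current_timestamp())
    )
    scored.write.format("delta").mode("append").saveAsTable(target_table)
```

Once actual outcomes for a quarter are known, joining them to the stored predictions and calling `precision_recall` gives a simple, comparable accuracy figure for each scoring period.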

Integration with your Data Platform/ Medallion Architecture

For teams already working with Spark, PySpark, data pipelines, medallion architectures and Microsoft Fabric, here’s how AutoML fits:

- Data ingestion & transformation (bronze → silver → gold): You already build robust pipelines and curate data into a gold layer that is ready for modelling.

- Model training layer: Use a Fabric notebook attached to the lakehouse to access your gold dataset; then use AutoML for model selection/training.

- Model deployment/serving: After training, you can embed scoring into your existing pipeline architecture (for example a daily or weekly job that scores new data and writes predictions back into the gold or a “serving” layer).

- Monitoring & governance: Leverage Fabric’s integrated tracking (MLflow, experiment metadata), version control, model registry, and pipeline orchestration.

- Iteration & refresh: Your medallion architecture supports iteration; you can incorporate model output into new datasets, retrain, update features, refine pipelines.

- Stakeholder collaboration: Analysts and business users can use the low-code UI to launch experiments; your data engineering foundation supports them; business users consume predictions via dashboards (e.g., Power BI).
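Because the gold layer effectively becomes the model’s input contract, a cheap guard before each training or scoring run is to compare the table schema with what the model expects. A sketch, assuming the schema comes from `dict(spark.read.table(...).dtypes)` (PySpark’s `DataFrame.dtypes` yields name/type-string pairs); the function, column names and types are hypothetical.

```python
def check_contract(columns, expected, label):
    """Compare a gold table's {name: type} mapping with the model's contract.

    Returns a list of human-readable problems; an empty list means the table
    can be used for training or scoring as-is.
    """
    problems = []
    if label not in columns:
        problems.append(f"missing label column {label!r}")
    for name, dtype in expected.items():
        if name not in columns:
            problems.append(f"missing feature {name!r}")
        elif columns[name] != dtype:
            problems.append(f"{name!r}: expected {dtype}, found {columns[name]}")
    return problems
```

Failing the pipeline step on a non-empty result surfaces upstream schema changes before they silently degrade predictions.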

Conclusion

AutoML within Microsoft Fabric is a compelling capability: it enables organisations to accelerate predictive modelling, work within a unified data and analytics platform, and better leverage the data engineering and analytics investments they’ve already made. For teams with strong foundations in Spark, lakehouses, medallion architectures and analytics pipelines, Fabric’s AutoML delivers both productivity and scale.

That said, success still depends on good data, clear objectives, integration into pipelines, governance and monitoring. AutoML doesn’t remove the need for engineering, domain knowledge and thoughtful deployment; it amplifies their value.

If you’re already working in Fabric, I recommend piloting AutoML on a well-scoped outcome: measure the reduction in cycle time, verify model performance and business impact, and then expand to more use cases.

This approach helps you move from raw data to actionable predictive insights in a streamlined, governed and scalable way — using the unified platform of Microsoft Fabric.

Blog Author

Anjali Deokar

Data Engineer

Intellify Solutions